Overview

TRTCCloud

Copyright (c) 2021 Tencent. All rights reserved.

Module: TRTCCloud @ TXLiteAVSDK

Function: TRTC's main feature API

Version: 11.7

TRTCCloud

FuncList | DESC |

Create TRTCCloud instance (singleton mode) | |

Terminate TRTCCloud instance (singleton mode) | |

Add TRTC event callback | |

Remove TRTC event callback | |

Set the queue that drives the TRTCCloudListener event callback | |

Enter room | |

Exit room | |

Switch role | |

Switch role (with permission credential) | |

Switch room | |

Request cross-room call | |

Exit cross-room call | |

Set subscription mode (which must be set before room entry for it to take effect) | |

Create room subinstance (for concurrent multi-room listen/watch) | |

Terminate room subinstance | |

| |

Start publishing audio/video streams to Tencent Cloud CSS CDN | |

Stop publishing audio/video streams to Tencent Cloud CSS CDN | |

Start publishing audio/video streams to non-Tencent Cloud CDN | |

Stop publishing audio/video streams to non-Tencent Cloud CDN | |

Set the layout and transcoding parameters of On-Cloud MixTranscoding | |

Publish a stream | |

Modify publishing parameters | |

Stop publishing | |

Enable the preview image of local camera (mobile) | |

Update the preview image of local camera | |

Stop camera preview | |

Pause/Resume publishing local video stream | |

Set placeholder image during local video pause | |

Subscribe to remote user's video stream and bind video rendering control | |

Update remote user's video rendering control | |

Stop subscribing to remote user's video stream and release rendering control | |

Stop subscribing to all remote users' video streams and release all rendering resources | |

Pause/Resume subscribing to remote user's video stream | |

Pause/Resume subscribing to all remote users' video streams | |

Set the encoding parameters of video encoder | |

Set network quality control parameters | |

Set the rendering parameters of local video image | |

Set the rendering mode of remote video image | |

Enable dual-channel encoding mode with big and small images | |

Switch the big/small image of specified remote user | |

Take a video screenshot | |

Set perspective correction coordinate points | |

Set the adaptation mode of gravity sensing (version 11.7 and above) | |

Enable local audio capturing and publishing | |

Stop local audio capturing and publishing | |

Pause/Resume publishing local audio stream | |

Pause/Resume playing back remote audio stream | |

Pause/Resume playing back all remote users' audio streams | |

Set audio route | |

Set the audio playback volume of remote user | |

Set the capturing volume of local audio | |

Get the capturing volume of local audio | |

Set the playback volume of remote audio | |

Get the playback volume of remote audio | |

Enable volume reminder | |

Start audio recording | |

Stop audio recording | |

Start local media recording | |

Stop local media recording | |

Set the parallel strategy of remote audio streams | |

Enable 3D spatial effect | |

Update self position and orientation for 3D spatial effect | |

Update the specified remote user's position for 3D spatial effect | |

Set the maximum 3D spatial attenuation range for userId's audio stream | |

Get device management class (TXDeviceManager) | |

Get beauty filter management class (TXBeautyManager) | |

Add watermark | |

Get sound effect management class (TXAudioEffectManager) | |

Enable system audio capturing | |

Stop system audio capturing (not supported on iOS) | |

Start screen sharing | |

Stop screen sharing | |

Pause screen sharing | |

Resume screen sharing | |

Set the video encoding parameters of screen sharing (i.e., substream) (for desktop and mobile systems) | |

Enable/Disable custom video capturing mode | |

Deliver captured video frames to SDK | |

Enable custom audio capturing mode | |

Deliver captured audio data to SDK | |

Enable/Disable custom audio track | |

Mix custom audio track into SDK | |

Set the publish volume and playback volume of mixed custom audio track | |

Generate custom capturing timestamp | |

Set video data callback for third-party beauty filters | |

Set the callback of custom rendering for local video | |

Set the callback of custom rendering for remote video | |

Set custom audio data callback | |

Set the callback format of audio frames captured by local mic | |

Set the callback format of preprocessed local audio frames | |

Set the callback format of audio frames to be played back by system | |

Enable custom audio playback | |

Get playable audio data | |

Use UDP channel to send custom message to all users in room | |

Use SEI channel to send custom message to all users in room | |

Start network speed test (used before room entry) | |

Stop network speed test | |

Get SDK version information | |

Set log output level | |

Enable/Disable console log printing | |

Enable/Disable local log compression | |

Set local log storage path | |

Set log callback | |

Display dashboard | |

Set dashboard margin | |

Call experimental APIs | |

Enable or disable private encryption of media streams | |

sharedInstance

TRTCCloud sharedInstance(Context context)

Create TRTCCloud instance (singleton mode)

Param | DESC |

context | It is only applicable to the Android platform. The SDK internally converts it into the ApplicationContext of Android to call the Android system API. |

Note

1. If you use delete ITRTCCloud*, a compilation error will occur. Use destroyTRTCCloud to release the object pointer.

2. On Windows, macOS, or iOS, call the getTRTCShareInstance() API.

3. On Android, call the getTRTCShareInstance(void *context) API.

destroySharedInstance

Terminate TRTCCloud instance (singleton mode)

addListener

void addListener |

Add TRTC event callback

You can use TRTCCloudListener to get various event notifications from the SDK, such as error codes, warning codes, and audio/video status parameters.

removeListener

setListenerHandler

void setListenerHandler(Handler listenerHandler)

Set the queue that drives the TRTCCloudListener event callback

If you do not specify a listenerHandler, the SDK uses MainQueue by default to drive TRTCCloudListener event callbacks. In other words, if you do not set listenerHandler, all callback functions in TRTCCloudListener are driven by MainQueue.

Param | DESC |

listenerHandler | |

Note

If you specify a listenerHandler, do not manipulate the UI in TRTCCloudListener callback functions; otherwise, thread safety issues will occur.

enterRoom

void enterRoom | |

| int scene) |

Enter room

All TRTC users need to enter a room before they can "publish" or "subscribe to" audio/video streams. "Publishing" refers to pushing their own streams to the cloud, and "subscribing to" refers to pulling the streams of other users in the room from the cloud.

When calling this API, you need to specify your application scenario (TRTCAppScene) to get the best audio/video transfer experience. The following four scenarios are available:

Video call scenario. Use cases: [one-to-one video call], [video conferencing with up to 300 participants], [online medical diagnosis], [small class], [video interview], etc.

In this scenario, each room supports up to 300 concurrent online users, and up to 50 of them can speak simultaneously.

Audio call scenario. Use cases: [one-to-one audio call], [audio conferencing with up to 300 participants], [audio chat], [online Werewolf], etc.

In this scenario, each room supports up to 300 concurrent online users, and up to 50 of them can speak simultaneously.

Live streaming scenario. Use cases: [low-latency video live streaming], [interactive classroom for up to 100,000 participants], [live video competition], [video dating room], [remote training], [large-scale conferencing], etc.

In this scenario, each room supports up to 100,000 concurrent online users, but you should specify the user roles: anchor (TRTCRoleAnchor) or audience (TRTCRoleAudience).

Audio chat room scenario. Use cases: [Clubhouse], [online karaoke room], [music live room], [FM radio], etc.

In this scenario, each room supports up to 100,000 concurrent online users, but you should specify the user roles: anchor (TRTCRoleAnchor) or audience (TRTCRoleAudience).

The room entry result is returned through the onEnterRoom(result) callback in TRTCCloudListener. If room entry succeeded, result is a positive number (result > 0) indicating the time in milliseconds (ms) between the function call and room entry. If room entry failed, result is a negative number (result < 0) indicating the TXLiteAVError code for the failure.

Param | DESC |

param | Room entry parameter, which is used to specify the user's identity, role, authentication credentials, and other information. For more information, please see TRTCParams. |

scene | Application scenario, which is used to specify the use case. The same TRTCAppScene should be configured for all users in the same room. |

Note

1. If scene is TRTCAppSceneLIVE or TRTCAppSceneVoiceChatRoom, you must use the role field in TRTCParams to specify the current user's role in the room.

2. The same scene should be configured for all users in the same room.
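
The result convention described above (positive value: elapsed milliseconds; negative value: error code) can be handled in one place. The following is a minimal sketch under that assumption; the class EnterRoomResult is hypothetical and not part of the SDK, and an app would typically call it from its TRTCCloudListener.onEnterRoom override.

```java
// Hypothetical helper (not part of the SDK) for interpreting the `result`
// value delivered to TRTCCloudListener.onEnterRoom(long result).
final class EnterRoomResult {
    private EnterRoomResult() {}

    /** A positive value means room entry succeeded. */
    static boolean succeeded(long result) {
        return result > 0;
    }

    /** Positive: elapsed time in ms; negative: a TXLiteAVError code. */
    static String describe(long result) {
        if (result > 0) {
            return "Entered room in " + result + " ms";
        }
        if (result < 0) {
            return "Room entry failed with error code " + result;
        }
        // Zero is not covered by the documented convention.
        return "Unexpected result 0";
    }
}
```

An onEnterRoom override might log describe(result) and start room-dependent UI flows only when succeeded(result) is true.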

exitRoom

Exit room

Calling this API makes the user leave the current audio or video room and releases the camera, mic, speaker, and other device resources.

After the resources are released, the SDK notifies you through the onExitRoom() callback in TRTCCloudListener.

If you need to call enterRoom again or switch to another provider's SDK, we recommend you wait until you receive the onExitRoom() callback, so that the camera or mic is not still occupied.
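
One way to follow this recommendation is to defer the next enterRoom call until onExitRoom arrives. The sketch below assumes all calls happen on the same thread (by default, the one that drives TRTCCloudListener callbacks); the class ReenterScheduler and its methods are hypothetical and not part of the SDK.

```java
// Hypothetical helper (not part of the SDK) that defers the next enterRoom
// call until onExitRoom has been received. Not thread-safe: it assumes every
// method is called on the thread that drives TRTCCloudListener callbacks.
final class ReenterScheduler {
    private boolean exiting;
    private Runnable pendingEnter;

    /** Call immediately before trtc.exitRoom(). */
    void markExiting() {
        exiting = true;
    }

    /** Runs the action now, or holds it until onExitRoom if an exit is in progress. */
    void runWhenIdle(Runnable enterRoomAction) {
        if (exiting) {
            pendingEnter = enterRoomAction; // a later request replaces an earlier one
        } else {
            enterRoomAction.run();
        }
    }

    /** Call from your TRTCCloudListener.onExitRoom(int reason) override. */
    void onExitRoom() {
        exiting = false;
        Runnable action = pendingEnter;
        pendingEnter = null;
        if (action != null) {
            action.run();
        }
    }
}
```

A blocking wait is deliberately avoided: with the default handler, callbacks are driven by the main queue, so blocking that thread while waiting would prevent onExitRoom from being delivered.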

switchRole

void switchRole(int role)

Switch role

This API is used to switch the user role between anchor and audience.

As video live rooms and audio chat rooms need to support an audience of up to 100,000 concurrent online users, the rule "only anchors can publish their audio/video streams" has been set. Therefore, when some users want to publish their streams (so that they can interact with anchors), they need to switch their role to "anchor" first.

You can use the role field in TRTCParams to specify the user role in advance during room entry, or use the switchRole API to switch roles after room entry.

Param | DESC |

role | Role, anchor by default. TRTCRoleAnchor: anchor, who can publish audio/video streams; up to 50 anchors can publish streams at the same time in one room. TRTCRoleAudience: audience, who cannot publish audio/video streams and can only watch the anchors' streams in the room; to publish, they must first switch to the "anchor" role through switchRole. One room supports an audience of up to 100,000 concurrent online users. |

Note

1. This API is only applicable to two scenarios: live streaming (TRTC_APP_SCENE_LIVE) and audio chat room (TRTC_APP_SCENE_VOICE_CHATROOM).

2. If the scene you specify in enterRoom is TRTC_APP_SCENE_VIDEOCALL or TRTC_APP_SCENE_AUDIOCALL, do not call this API.
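
The scene restriction above can be checked before calling switchRole. This is a minimal sketch: the enum AppScene and the class RoleSwitchGuard are hypothetical, and the real SDK identifies scenes with int constants such as TRTCCloudDef.TRTC_APP_SCENE_LIVE.

```java
// Hypothetical mirror of the four application scenarios; the real SDK
// uses int constants such as TRTCCloudDef.TRTC_APP_SCENE_LIVE.
enum AppScene { VIDEOCALL, AUDIOCALL, LIVE, VOICE_CHATROOM }

// Hypothetical guard (not part of the SDK) for the scene restriction above.
final class RoleSwitchGuard {
    private RoleSwitchGuard() {}

    /** Anchor/audience roles, and therefore switchRole, apply only to these two scenes. */
    static boolean canSwitchRole(AppScene scene) {
        return scene == AppScene.LIVE || scene == AppScene.VOICE_CHATROOM;
    }
}
```

The same check also tells you when the role field in TRTCParams must be filled in before enterRoom.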

switchRole

void switchRole(int role, final String privateMapKey)

Switch role (with permission credential)

This API is used to switch the user role between anchor and audience.

As video live rooms and audio chat rooms need to support an audience of up to 100,000 concurrent online users, the rule "only anchors can publish their audio/video streams" has been set. Therefore, when some users want to publish their streams (so that they can interact with anchors), they need to switch their role to "anchor" first.

You can use the role field in TRTCParams to specify the user role in advance during room entry, or use the switchRole API to switch roles after room entry.

Param | DESC |

privateMapKey | Permission credential used for permission control. If you want only users with the specified userId values to enter a room or push streams, you need to use privateMapKey to restrict the permission. We recommend you use this parameter only if you have high security requirements. For more information, please see Enabling Advanced Permission Control. |

role | Role, anchor by default. TRTCRoleAnchor: anchor, who can publish audio/video streams; up to 50 anchors can publish streams at the same time in one room. TRTCRoleAudience: audience, who cannot publish audio/video streams and can only watch the anchors' streams in the room; to publish, they must first switch to the "anchor" role through switchRole. One room supports an audience of up to 100,000 concurrent online users. |

Note

1. This API is only applicable to two scenarios: live streaming (TRTCAppSceneLIVE) and audio chat room (TRTCAppSceneVoiceChatRoom).

2. If the scene you specify in enterRoom is TRTCAppSceneVideoCall or TRTCAppSceneAudioCall, do not call this API.

switchRoom

void switchRoom |

Switch room

This API is used to quickly switch a user from one room to another.

If the user's role is audience, calling this API is equivalent to exitRoom (current room) + enterRoom (new room). If the user's role is anchor, the API retains the current audio/video publishing status while switching rooms; therefore, camera preview and sound capturing are not interrupted during the switch.

This API suits online education scenarios where a supervising teacher needs to switch quickly between multiple rooms. In this scenario, switchRoom gives smoother transitions and requires less code than exitRoom + enterRoom. The result of the API call is returned through the onSwitchRoom(errCode, errMsg) callback in TRTCCloudListener.

Param | DESC |

config |

Note

For compatibility with legacy versions of the SDK, the config parameter contains both roomId and strRoomId. Pay special attention to the following when specifying these two parameters:

1. If you decide to use strRoomId, set roomId to 0. If both are specified, roomId will be used.

2. All rooms must consistently use either strRoomId or roomId. Do not mix them; otherwise, many unexpected bugs will occur.
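
The precedence rule in note 1 can be expressed as a small helper. This is a sketch under that rule only; RoomIdResolver is a hypothetical name, and it returns the effective ID as a string purely for illustration.

```java
// Hypothetical helper (not part of the SDK) applying the roomId/strRoomId rule:
// a non-zero roomId always wins; strRoomId is used only when roomId is 0.
final class RoomIdResolver {
    private RoomIdResolver() {}

    static String effectiveRoomId(int roomId, String strRoomId) {
        if (roomId != 0) {
            return String.valueOf(roomId);
        }
        if (strRoomId == null || strRoomId.isEmpty()) {
            throw new IllegalArgumentException("Either roomId or strRoomId must be set");
        }
        return strRoomId;
    }
}
```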

ConnectOtherRoom

void ConnectOtherRoom(String param)

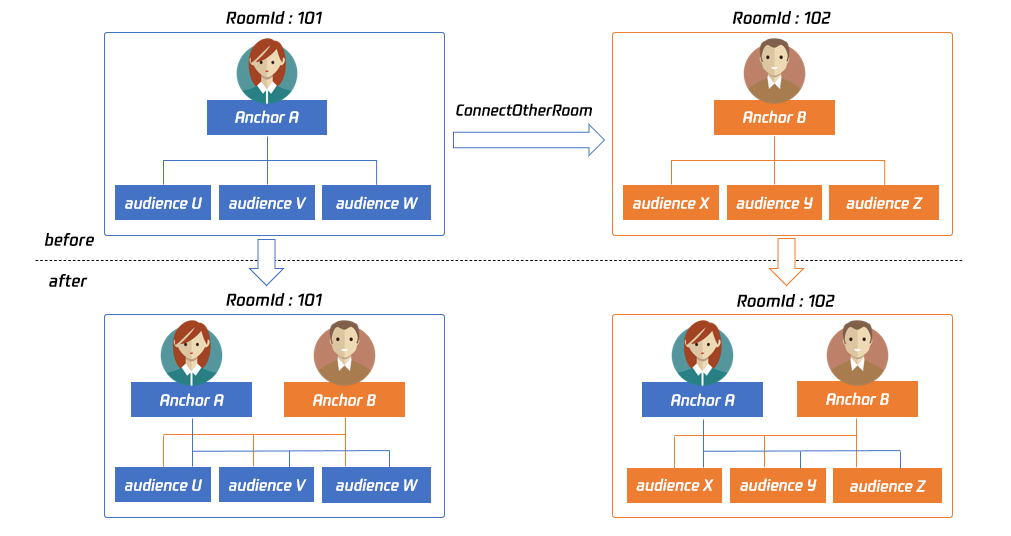

Request cross-room call

By default, only users in the same room can make audio/video calls with each other, and the audio/video streams in different rooms are isolated from each other.

However, you can publish the audio/video streams of an anchor in another room to the current room by calling this API. At the same time, this API will also publish the local audio/video streams to the target anchor's room.

In other words, you can use this API to share the audio/video streams of two anchors in two different rooms, so that the audience in each room can watch the streams of these two anchors. This feature can be used to implement anchor competition.

The result of the cross-room call request is returned through the onConnectOtherRoom callback in TRTCCloudListener.

For example, after anchor A in room "101" uses ConnectOtherRoom() to successfully call anchor B in room "102":

All users in room "101" will receive the onRemoteUserEnterRoom(B) and onUserVideoAvailable(B,true) event callbacks of anchor B; that is, all users in room "101" can subscribe to anchor B's audio/video streams.

All users in room "102" will receive the onRemoteUserEnterRoom(A) and onUserVideoAvailable(A,true) event callbacks of anchor A; that is, all users in room "102" can subscribe to anchor A's audio/video streams.

To allow the cross-room call fields to be extended in the future, the parameter is currently passed in JSON format.

Case 1: numeric room ID

If anchor A in room "101" wants to co-anchor with anchor B in room "102", then anchor A needs to pass in {"roomId": 102, "userId": "userB"} when calling this API.

Below is the sample code:

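import org.json.JSONException;
import org.json.JSONObject;

JSONObject jsonObj = new JSONObject();
try {
    jsonObj.put("roomId", 102);       // numeric room ID
    jsonObj.put("userId", "userB");
} catch (JSONException e) {           // put() declares a checked exception
    e.printStackTrace();
}
trtc.ConnectOtherRoom(jsonObj.toString());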

Case 2: string room ID

If you use a string room ID, be sure to replace roomId in the JSON with strRoomId, such as {"strRoomId": "102", "userId": "userB"}.

Below is the sample code:

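import org.json.JSONException;
import org.json.JSONObject;

JSONObject jsonObj = new JSONObject();
try {
    jsonObj.put("strRoomId", "102");  // string room ID
    jsonObj.put("userId", "userB");
} catch (JSONException e) {           // put() declares a checked exception
    e.printStackTrace();
}
trtc.ConnectOtherRoom(jsonObj.toString());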

Param | DESC |

param | You need to pass in a string parameter in JSON format: roomId represents the room ID in numeric format, strRoomId represents the room ID in string format, and userId represents the user ID of the target anchor. |

DisconnectOtherRoom

Exit cross-room call

The result is returned through the onDisconnectOtherRoom() callback in TRTCCloudListener.

setDefaultStreamRecvMode

void setDefaultStreamRecvMode(boolean autoRecvAudio, boolean autoRecvVideo)

Set subscription mode (which must be set before room entry for it to take effect)

You can switch between the "automatic subscription" and "manual subscription" modes through this API:

Automatic subscription: this is the default mode, where the user immediately receives the audio/video streams in the room after room entry, so the audio is automatically played back and the video is automatically decoded (you still need to bind the rendering control through the startRemoteView API).

Manual subscription: after room entry, the user needs to manually call the startRemoteView API to start subscribing to and decoding the video stream, and call muteRemoteAudio(false) to start playing back the audio stream.

In most scenarios, users will subscribe to the audio/video streams of all anchors in the room after room entry. Therefore, TRTC adopts the automatic subscription mode by default in order to achieve the best "instant streaming experience".

In your application scenario, if there are many audio/video streams being published at the same time in each room, and each user only wants to subscribe to 1–2 streams of them, we recommend you use the "manual subscription" mode to reduce the traffic costs.

Param | DESC |

autoRecvAudio | true: automatic subscription to audio; false: manual subscription to audio by calling muteRemoteAudio(false). Default value: true |

autoRecvVideo | true: automatic subscription to video; false: manual subscription to video by calling startRemoteView. Default value: true |

Note

1. The configuration takes effect only if this API is called before room entry (enterRoom).

2. In the automatic subscription mode, if the user does not call startRemoteView to subscribe to the video stream after room entry, the SDK will automatically stop subscribing to the video stream in order to reduce the traffic consumption.
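
In manual subscription mode (setDefaultStreamRecvMode called with false), the app decides which video streams to pull. A minimal sketch, assuming the app wants at most a fixed number of remote videos and subscribes to the earliest available users first: the hypothetical class below consumes onUserVideoAvailable events and returns the user IDs for which the app would then call startRemoteView. The first-come, first-served policy is an illustrative choice, not SDK behavior.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical planner (not part of the SDK) for manual subscription mode.
// Keeps at most `maxStreams` remote videos subscribed, in arrival order.
final class ManualSubscriptionPlanner {
    private final int maxStreams;
    private final Set<String> available = new LinkedHashSet<>();
    private final Set<String> subscribed = new LinkedHashSet<>();

    ManualSubscriptionPlanner(int maxStreams) {
        this.maxStreams = maxStreams;
    }

    /** Feed onUserVideoAvailable here; returns users to start subscribing to. */
    List<String> onUserVideoAvailable(String userId, boolean isAvailable) {
        if (isAvailable) {
            available.add(userId);
        } else {
            available.remove(userId);
            subscribed.remove(userId); // the app would call stopRemoteView here
        }
        List<String> toStart = new ArrayList<>();
        for (String id : available) {
            if (subscribed.size() >= maxStreams) {
                break;
            }
            if (subscribed.add(id)) { // true only for a newly subscribed user
                toStart.add(id);
            }
        }
        return toStart;
    }

    Set<String> subscribed() {
        return subscribed;
    }
}
```

When a subscribed user's video goes away, the freed slot is handed to the next available user on the same event.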

createSubCloud

Create room subinstance (for concurrent multi-room listen/watch)

TRTCCloud was originally designed to work in singleton mode, which prevented watching multiple rooms concurrently. By calling this API, you can create multiple TRTCCloud instances, so that you can enter multiple different rooms at the same time to listen to or watch audio/video streams.

However, note that your ability to publish audio and video streams across multiple TRTCCloud instances is limited.

This feature is mainly used in the "super small class" use case in the online education scenario to break the limit that "only up to 50 users can publish their audio/video streams simultaneously in one TRTC room".

Below is the sample code:

// In the small room that needs interaction, enter the room as an anchor and push audio and video streams
TRTCCloud mainCloud = TRTCCloud.sharedInstance(mContext);
TRTCCloudDef.TRTCParams mainParams = new TRTCCloudDef.TRTCParams();
// Fill your params
mainParams.role = TRTCCloudDef.TRTCRoleAnchor;
mainCloud.enterRoom(mainParams, TRTCCloudDef.TRTC_APP_SCENE_LIVE);
// ...
mainCloud.startLocalPreview(true, videoView);
mainCloud.startLocalAudio(TRTCCloudDef.TRTC_AUDIO_QUALITY_DEFAULT);

// In the large room that only needs to watch, enter the room as an audience and pull audio and video streams
TRTCCloud subCloud = mainCloud.createSubCloud();
TRTCCloudDef.TRTCParams subParams = new TRTCCloudDef.TRTCParams();
// Fill your params
subParams.role = TRTCCloudDef.TRTCRoleAudience;
subCloud.enterRoom(subParams, TRTCCloudDef.TRTC_APP_SCENE_LIVE);
// ...
subCloud.startRemoteView(userId, TRTCCloudDef.TRTC_VIDEO_STREAM_TYPE_BIG, view);
// ...
// Exit from the new room and release the subinstance
subCloud.exitRoom();
mainCloud.destroySubCloud(subCloud);

Note

The same user can enter multiple rooms with different roomId values by using the same userId. Two devices cannot use the same userId to enter the same room with a specified roomId. You can set a TRTCCloudListener separately for each instance to get its own event notifications.

The same user can push streams in multiple TRTCCloud instances at the same time and can also call local audio/video APIs in a subinstance. However, note the following:

1. All instances must capture audio from the same source at the same time (either the microphone or custom data), and for audio device APIs, the most recent call takes effect.

2. For camera-related APIs such as startLocalPreview, the most recent call takes effect.

Return Desc:

TRTCCloud subinstance

destroySubCloud

void destroySubCloud |

Terminate room subinstance

Param | DESC |

subCloud | |

startPublishing

void startPublishing(final String streamId, final int streamType)

Start publishing audio/video streams to Tencent Cloud CSS CDN

This API sends a command to the TRTC server, requesting it to relay the current user's audio/video streams to CSS CDN.

You can set the StreamId of the live stream through the streamId parameter, which determines the playback address of the user's audio/video streams on CSS CDN.

For example, if you specify the current user's live stream ID as user_stream_001 through this API, the corresponding CDN playback address is "http://yourdomain/live/user_stream_001.flv", where yourdomain is your own playback domain name with an ICP filing.

You can configure your playback domain name in the CSS console. Tencent Cloud does not provide a default playback domain name.
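
The address pattern in the example above can be assembled as follows. This sketch covers only the HTTP-FLV form shown here; the class name CdnPlaybackUrl is hypothetical, and playbackDomain must be a domain you have configured in the CSS console.

```java
// Hypothetical helper (not part of the SDK) that builds the HTTP-FLV
// playback address pattern shown above: http://<domain>/live/<streamId>.flv
final class CdnPlaybackUrl {
    private CdnPlaybackUrl() {}

    static String flv(String playbackDomain, String streamId) {
        if (playbackDomain.isEmpty() || streamId.isEmpty()) {
            throw new IllegalArgumentException("playbackDomain and streamId are required");
        }
        return "http://" + playbackDomain + "/live/" + streamId + ".flv";
    }
}
```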

You can also specify streamId when setting the TRTCParams parameter of enterRoom, which is the recommended approach.

Param | DESC |

streamId | Custom stream ID. |

streamType | Only TRTCVideoStreamTypeBig and TRTCVideoStreamTypeSub are supported. |

Note

You need to enable the "Enable Relayed Push" option on the "Function Configuration" page in the TRTC console in advance.

If you select "Specified stream for relayed push", you can use this API to push the corresponding audio/video stream to Tencent Cloud CDN and specify the entered stream ID.

If you select "Global auto-relayed push", you can use this API to adjust the default stream ID.

stopPublishing

Stop publishing audio/video streams to Tencent Cloud CSS CDN

startPublishCDNStream

void startPublishCDNStream |

Start publishing audio/video streams to non-Tencent Cloud CDN

This API is similar to startPublishing. The difference is that startPublishing can only publish audio/video streams to Tencent Cloud CDN, while this API can relay streams to the live streaming CDN services of other cloud providers.

Param | DESC |

param |

Note

Using the startPublishing API to publish audio/video streams to Tencent Cloud CSS CDN does not incur additional fees. Using the startPublishCDNStream API to publish audio/video streams to non-Tencent Cloud CDN incurs additional relaying bandwidth fees.

stopPublishCDNStream

Stop publishing audio/video streams to non-Tencent Cloud CDN

setMixTranscodingConfig

void setMixTranscodingConfig |

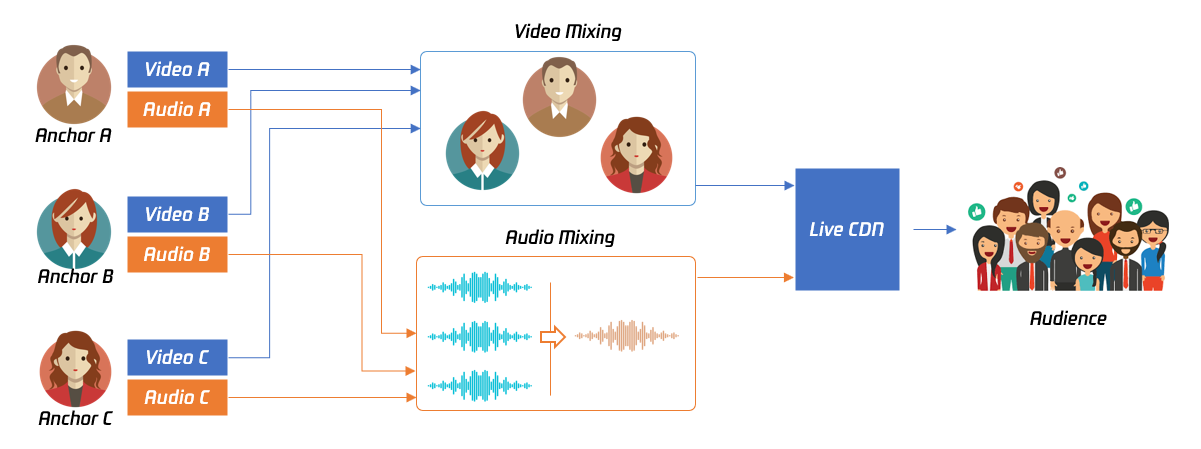

Set the layout and transcoding parameters of On-Cloud MixTranscoding

In a live room, multiple anchors may be publishing their audio/video streams at the same time, but audience members on CSS CDN only need to watch one video stream in HTTP-FLV or HLS format.

When you call this API, the SDK will send a command to the TRTC mixtranscoding server to combine multiple audio/video streams in the room into one stream.

You can use the TRTCTranscodingConfig parameter to set the layout of each video image, as well as the encoding parameters of the mixed audio/video stream.

Param | DESC |

config | If config is not empty, On-Cloud MixTranscoding will be started; otherwise, it will be stopped. For more information, please see TRTCTranscodingConfig. |

Note

Notes on On-Cloud MixTranscoding:

On-Cloud MixTranscoding is a paid feature; calling this API incurs cloud-based MixTranscoding fees. For details, see Billing of On-Cloud MixTranscoding.

If the user calling this API does not set streamId in the config parameter, TRTC mixes the multiple images in the room into the audio/video stream of the current user, i.e., A + B => A. If the user calling this API sets streamId in the config parameter, TRTC mixes the multiple images in the room into the specified streamId, i.e., A + B => streamId.

If you are still in the room but no longer need MixTranscoding, be sure to call this API again with config left empty to cancel it; otherwise, additional fees may be incurred. TRTC automatically cancels MixTranscoding when you exit the room.
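
The streamId rule above decides where the mixed output ends up. A minimal sketch of that decision, assuming the caller knows its own stream ID; MixOutput is a hypothetical name and not part of the SDK.

```java
// Hypothetical helper (not part of the SDK) mirroring the rule above:
// no streamId in config => mix into the caller's own stream (A + B => A);
// streamId set          => mix into that stream (A + B => streamId).
final class MixOutput {
    private MixOutput() {}

    static String targetStreamId(String callerStreamId, String configStreamId) {
        return (configStreamId == null || configStreamId.isEmpty())
                ? callerStreamId
                : configStreamId;
    }
}
```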

startPublishMediaStream

void startPublishMediaStream | |

| |

|

Publish a stream

After this API is called, the TRTC server will relay the stream of the local user to a CDN (after transcoding or without transcoding), or transcode and publish the stream to a TRTC room.

Param | DESC |

config | The On-Cloud MixTranscoding settings. This parameter is invalid in the relay-to-CDN mode. It is required if you transcode and publish the stream to a CDN or to a TRTC room. For details, see TRTCStreamMixingConfig. |

params | The encoding settings. This parameter is required if you transcode and publish the stream to a CDN or to a TRTC room. If you relay to a CDN without transcoding, to improve the relaying stability and playback compatibility, we also recommend you set this parameter. For details, see TRTCStreamEncoderParam. |

target | The publishing destination. You can relay the stream to a CDN (after transcoding or without transcoding) or transcode and publish the stream to a TRTC room. For details, see TRTCPublishTarget. |

Note

2. You can start a publishing task only once and cannot initiate two tasks that use the same publishing mode and the same CDN URL. Note the task ID returned, which you need to pass to updatePublishMediaStream to modify the publishing parameters or to stopPublishMediaStream to stop the task.

3. You can specify up to 10 CDN URLs in target. You are charged only once for transcoding even if you relay to multiple CDNs.

4. To avoid errors, do not specify the same URLs for different publishing tasks executed at the same time. We recommend adding "sdkappid_roomid_userid_main" to URLs to distinguish them from one another and avoid conflicts between applications.
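
Notes 3 and 4 can be enforced on the app side before a task is started. The sketch below is an assumption about how an app might track this locally; PublishUrlGuard and its methods are hypothetical, and the SDK performs its own validation independently.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical app-side guard (not part of the SDK): at most 10 CDN URLs per
// task, and no URL shared with another task that is still running.
final class PublishUrlGuard {
    static final int MAX_CDN_URLS = 10;
    private final Set<String> urlsInUse = new HashSet<>();

    /** Builds the suggested "sdkappid_roomid_userid_main" component for a URL. */
    static String streamName(long sdkAppId, String roomId, String userId) {
        return sdkAppId + "_" + roomId + "_" + userId + "_main";
    }

    /** Reserves the URLs of a new task, or throws if a rule above is violated. */
    void reserve(List<String> cdnUrls) {
        if (cdnUrls.size() > MAX_CDN_URLS) {
            throw new IllegalArgumentException("At most " + MAX_CDN_URLS + " CDN URLs per task");
        }
        if (new HashSet<>(cdnUrls).size() != cdnUrls.size()) {
            throw new IllegalArgumentException("Duplicate URL within one task");
        }
        for (String url : cdnUrls) {
            if (urlsInUse.contains(url)) {
                throw new IllegalStateException("URL already used by a running task: " + url);
            }
        }
        urlsInUse.addAll(cdnUrls);
    }

    /** Frees the URLs once the task has been stopped. */
    void release(List<String> cdnUrls) {
        urlsInUse.removeAll(cdnUrls);
    }
}
```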

updatePublishMediaStream

void updatePublishMediaStream | (final String taskId |

| |

| |

|

Modify publishing parameters

You can use this API to change the parameters of a publishing task initiated by startPublishMediaStream.

Param | DESC |

config | The On-Cloud MixTranscoding settings. This parameter is invalid in the relay-to-CDN mode. It is required if you transcode and publish the stream to a CDN or to a TRTC room. For details, see TRTCStreamMixingConfig. |

params | The encoding settings. This parameter is required if you transcode and publish the stream to a CDN or to a TRTC room. If you relay to a CDN without transcoding, to improve the relaying stability and playback compatibility, we recommend you set this parameter. For details, see TRTCStreamEncoderParam. |

target | The publishing destination. You can relay the stream to a CDN (after transcoding or without transcoding) or transcode and publish the stream to a TRTC room. For details, see TRTCPublishTarget. |

taskId |

Note

1. You can use this API to add or remove CDN URLs to publish to (you can publish to up to 10 CDNs at a time). To avoid causing errors, do not specify the same URLs for different tasks executed at the same time.

2. You can use this API to switch a relaying task to transcoding or vice versa. For example, in cross-room communication, you can first call startPublishMediaStream to relay to a CDN. When the anchor requests cross-room communication, call this API, passing in the task ID to switch the relaying task to a transcoding task. This can ensure that the live stream and CDN playback are not interrupted (you need to keep the encoding parameters consistent).

3. You cannot switch the output of the same task between "audio only", "video only", and "audio and video".

stopPublishMediaStream

void stopPublishMediaStream(final String taskId)

Stop publishing

Param | DESC |

taskId |

Note

1. If the task ID is not saved to your backend, you can call startPublishMediaStream again when an anchor re-enters the room after abnormal exit. The publishing will fail, but the TRTC backend will return the task ID to you.

2. If taskId is left empty, the TRTC backend ends all tasks you started through startPublishMediaStream. You can leave it empty if you have started only one task or want to stop all publishing tasks started by you.
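
Because stopping depends on the task IDs you kept, an app may want a local record of them. A minimal sketch mirroring the stop semantics described above (a specific taskId ends that task; an empty taskId ends all of them); PublishTaskRegistry is hypothetical and only tracks what the app believes is running.

```java
import java.util.LinkedHashSet;
import java.util.Set;

// Hypothetical app-side record (not part of the SDK) of publishing task IDs.
final class PublishTaskRegistry {
    private final Set<String> taskIds = new LinkedHashSet<>();

    /** Record the task ID returned for a started publishing task. */
    void onStarted(String taskId) {
        taskIds.add(taskId);
    }

    /** Returns the task IDs that stopPublishMediaStream(taskId) is expected to end. */
    Set<String> stop(String taskId) {
        Set<String> stopped = new LinkedHashSet<>();
        if (taskId == null || taskId.isEmpty()) {
            stopped.addAll(taskIds); // empty taskId: all tasks end
            taskIds.clear();
        } else if (taskIds.remove(taskId)) {
            stopped.add(taskId);
        }
        return stopped;
    }

    int activeCount() {
        return taskIds.size();
    }
}
```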

startLocalPreview

void startLocalPreview(boolean frontCamera, TXCloudVideoView view)

Enable the preview image of local camera (mobile)

If this API is called before enterRoom, the SDK only enables the camera and waits until enterRoom is called before it starts pushing. If it is called after enterRoom, the SDK enables the camera and automatically starts pushing the video stream. When the first camera video frame starts to be rendered, you will receive the onCameraDidReady callback in TRTCCloudListener.

Param | DESC |

frontCamera | true: front camera; false: rear camera |

view | Control that carries the video image |

Note

If you want to preview the camera image and adjust the beauty filter parameters through BeautyManager before going live, you can use either of the following options:

1. Call startLocalPreview before calling enterRoom.

2. Call startLocalPreview and muteLocalVideo(true) after calling enterRoom.

updateLocalView

void updateLocalView(TXCloudVideoView view)

Update the preview image of local camera

stopLocalPreview

Stop camera preview

muteLocalVideo

void muteLocalVideo(int streamType, boolean mute)

Pause/Resume publishing local video stream

This API can pause (or resume) publishing the local video image. After the pause, other users in the same room will not be able to see the local image.

When TRTCVideoStreamTypeBig is specified, this API is equivalent to the startLocalPreview/stopLocalPreview APIs, but with higher performance and faster response. The startLocalPreview/stopLocalPreview APIs need to enable/disable the camera, which are hardware device operations and therefore time-consuming. In contrast, muteLocalVideo only needs to pause or resume the data stream at the software level, so it is more efficient and better suited to scenarios where video is turned on and off frequently.

After local video publishing is paused, other members in the same room receive the onUserVideoAvailable(userId, false) callback notification. After local video publishing is resumed, they receive the onUserVideoAvailable(userId, true) callback notification.

Param | DESC |

mute | true: pause; false: resume |

streamType | Specify for which video stream to pause (or resume). Only TRTCVideoStreamTypeBig and TRTCVideoStreamTypeSub are supported |

setVideoMuteImage

void setVideoMuteImage(Bitmap image, int fps)

Set placeholder image during local video pause

When you call muteLocalVideo(true) to pause the local video image, you can set a placeholder image by calling this API. Other users in the room will then see this image instead of a black screen.

Param | DESC |

fps | Frame rate of the placeholder image. Minimum value: 5. Maximum value: 10. Default value: 5 |

image | Placeholder image. A null value means that no more video stream data will be sent after muteLocalVideo(true) is called. The default value is null. |

startRemoteView

void startRemoteView(String userId, int streamType, TXCloudVideoView view)

Subscribe to remote user's video stream and bind video rendering control

Calling this API makes the SDK pull the video stream of the specified userId and render it to the control specified by the view parameter. You can set the display mode of the video image through setRemoteRenderParams.

If you already know the userId of a user who is publishing video in the room, you can call startRemoteView directly to subscribe to that user's video. If you don't know which users in the room are publishing video, wait for the onUserVideoAvailable notification after enterRoom.

This API only starts pulling the video stream. The image still needs to be loaded and buffered. When buffering completes, you will receive the onFirstVideoFrame notification.

Param | DESC |

streamType | Video stream type of the specified userId to watch: HD big image: TRTCVideoStreamTypeBig; Smooth small image: TRTCVideoStreamTypeSmall (the remote user must enable dual-channel encoding through enableEncSmallVideoStream for this value to take effect); Substream image (usually used for screen sharing): TRTCVideoStreamTypeSub |

userId | ID of the specified remote user |

view | Rendering control that carries the video image |
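The rules in the Note that follows can be captured in a small model. The sketch below is hypothetical and not part of the SDK. StreamSubscriptionRules and its StreamType enum are illustrative names that stand in for the TRTCVideoStreamType constants. The model covers only the three documented rules.

```java
import java.util.Set;

// Hypothetical model of the startRemoteView subscription rules (not an SDK class).
// StreamType stands in for TRTCVideoStreamTypeBig / Small / Sub.
final class StreamSubscriptionRules {
    enum StreamType { BIG, SMALL, SUB }

    private StreamSubscriptionRules() {}

    // Rule 1: big + small of the same user cannot be watched together;
    // big + substream and small + substream are allowed.
    static boolean isAllowedCombination(Set<StreamType> watched) {
        return !(watched.contains(StreamType.BIG) && watched.contains(StreamType.SMALL));
    }

    // Rules 2 and 3: a small image exists only if the remote user enabled
    // enableEncSmallVideoStream; otherwise the big image is delivered instead.
    static StreamType effectiveType(StreamType requested, boolean senderHasSmallStream) {
        if (requested == StreamType.SMALL && !senderHasSmallStream) {
            return StreamType.BIG;
        }
        return requested;
    }
}
```

For example, effectiveType(StreamType.SMALL, false) returns StreamType.BIG. This matches the documented fallback when the remote user has not enabled enableEncSmallVideoStream.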

Note

The following requires your attention:

1. The SDK supports watching the big image and substream image, or the small image and substream image, of a userId at the same time. It does not support watching the big image and small image at the same time.

2. The small image of a userId can be viewed only when that user has enabled dual-channel encoding through enableEncSmallVideoStream.

3. If the small image of the specified userId does not exist, the SDK falls back to the user's big image by default.

updateRemoteView

void updateRemoteView(String userId, int streamType, TXCloudVideoView view)

Update remote user's video rendering control

This API can be used to update the rendering control of the remote video image. It is often used in interactive scenarios where the display area needs to be switched.

Param | DESC |

streamType | Type of the stream for which to set the preview window (only TRTCVideoStreamTypeBig and TRTCVideoStreamTypeSub are supported) |

userId | ID of the specified remote user |

view | Control that carries the video image |

stopRemoteView

void stopRemoteView(String userId, int streamType)

Stop subscribing to remote user's video stream and release rendering control

Calling this API will cause the SDK to stop receiving the user's video stream and release the decoding and rendering resources for the stream.

Param | DESC |

streamType | Video stream type of the specified userId to stop watching: HD big image: TRTCVideoStreamTypeBig; Smooth small image: TRTCVideoStreamTypeSmall; Substream image (usually used for screen sharing): TRTCVideoStreamTypeSub |

userId | ID of the specified remote user |

stopAllRemoteView

Stop subscribing to all remote users' video streams and release all rendering resources

Calling this API will cause the SDK to stop receiving all remote video streams and release all decoding and rendering resources.

Note

If a substream image (screen sharing) is being displayed, it will also be stopped.

muteRemoteVideoStream

void muteRemoteVideoStream(String userId, int streamType, boolean mute)

Pause/Resume subscribing to remote user's video stream

This API only pauses/resumes receiving the specified user's video stream. It does not release display resources, so the video image freezes on the last frame received before the call.

Param | DESC |

mute | Whether to pause receiving |

streamType | Video stream to pause (or resume): HD big image: TRTCVideoStreamTypeBig; Smooth small image: TRTCVideoStreamTypeSmall; Substream image (usually used for screen sharing): TRTCVideoStreamTypeSub |

userId | ID of the specified remote user |

Note

This API can be called before room entry (enterRoom), and the pause status will be reset after room exit (exitRoom).

After calling this API to pause receiving the video stream from a specific user, simply calling the startRemoteView API will not be able to play the video from that user. You need to call muteRemoteVideoStream(false) or muteAllRemoteVideoStreams(false) to resume it.

muteAllRemoteVideoStreams

void muteAllRemoteVideoStreams(boolean mute)

Pause/Resume subscribing to all remote users' video streams

This API only pauses/resumes receiving all users' video streams. It does not release display resources, so each video image freezes on the last frame received before the call.

Param | DESC |

mute | Whether to pause receiving |

Note

This API can be called before room entry (enterRoom), and the pause status will be reset after room exit (exitRoom).

After calling this API to pause receiving video streams from all users, calling startRemoteView alone will not play a specific user's video. You need to call muteRemoteVideoStream(false) or muteAllRemoteVideoStreams(false) to resume it.

setVideoEncoderParam

void setVideoEncoderParam(TRTCCloudDef.TRTCVideoEncParam param)

Set the encoding parameters of video encoder

This setting determines the image quality seen by remote users, which is also the image quality of on-cloud recording files.

Param | DESC |

param | It is used to set relevant parameters for the video encoder. For more information, please see TRTCVideoEncParam. |

Note

Starting from v11.5, the encoded output resolution is aligned downward: the width to a multiple of 8 pixels and the height to a multiple of 2 pixels. For example, an input resolution of 540x960 is encoded at 536x960.
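The alignment rule above is plain integer arithmetic. The sketch below predicts the encoded output size from an input resolution. EncodeAlignment is a hypothetical helper, not an SDK class. The sketch assumes the rule is exactly "round the width down to a multiple of 8 and the height down to a multiple of 2", as the 540x960 to 536x960 example suggests.

```java
// Hypothetical helper (not an SDK class): predicts the encoded output size
// under the v11.5+ alignment rule (width down to a multiple of 8,
// height down to a multiple of 2).
final class EncodeAlignment {
    private EncodeAlignment() {}

    // Rounds a non-negative value down to the nearest multiple of alignment.
    static int alignDown(int value, int alignment) {
        if (value < 0 || alignment <= 0) {
            throw new IllegalArgumentException("value must be >= 0 and alignment > 0");
        }
        return (value / alignment) * alignment;
    }

    // Returns {width, height} as the encoder is expected to output them.
    static int[] alignedOutput(int inputWidth, int inputHeight) {
        return new int[] { alignDown(inputWidth, 8), alignDown(inputHeight, 2) };
    }
}
```

For example, alignedOutput(540, 960) yields {536, 960}. An input resolution that is already aligned, such as 544x960, passes through unchanged under this rule.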

setNetworkQosParam

void setNetworkQosParam(TRTCCloudDef.TRTCNetworkQosParam param)

Set network quality control parameters

This setting determines the quality control policy in a poor network environment, such as "image quality preferred" or "smoothness preferred".

Param | DESC |

param | It is used to set relevant parameters for network quality control. For details, please refer to TRTCNetworkQosParam. |

setLocalRenderParams

void setLocalRenderParams |

Set the rendering parameters of local video image

The parameters that can be set include video image rotation angle, fill mode, and mirror mode.

Param | DESC |

params |

setRemoteRenderParams

void setRemoteRenderParams | (String userId |

| int streamType |

|

Set the rendering mode of remote video image

The parameters that can be set include video image rotation angle, fill mode, and mirror mode.

Param | DESC |

params | |

streamType | It can be set to the primary stream image (TRTCVideoStreamTypeBig) or substream image (TRTCVideoStreamTypeSub). |

userId | ID of the specified remote user |

enableEncSmallVideoStream

int enableEncSmallVideoStream | (boolean enable |

|

Enable dual-channel encoding mode with big and small images

In this mode, the current user's encoder will output two channels of video streams, i.e., HD big image and Smooth small image, at the same time (only one channel of audio stream will be output though).

In this way, other users in the room can choose to subscribe to the HD big image or Smooth small image according to their own network conditions or screen size.

Param | DESC |

enable | Whether to enable small image encoding. Default value: false |

smallVideoEncParam | Video parameters of small image stream |

Note

Dual-channel encoding will consume more CPU resources and network bandwidth; therefore, this feature can be enabled on macOS, Windows, or high-spec tablets, but is not recommended for phones.

Return Desc:

0: success; -1: the current big image has been set to a lower quality, and it is not necessary to enable dual-channel encoding

setRemoteVideoStreamType

int setRemoteVideoStreamType(String userId, int streamType)

Switch the big/small image of specified remote user

After an anchor in a room enables dual-channel encoding, the video image that other users in the room subscribe to through startRemoteView will be HD big image by default.

You can use this API to choose whether to subscribe to the big image or the small image. It takes effect whether it is called before or after startRemoteView.

Param | DESC |

streamType | Video stream type, i.e., big image or small image. Default value: big image |

userId | ID of the specified remote user |

Note

To implement this feature, the target user must have enabled the dual-channel encoding mode through enableEncSmallVideoStream; otherwise, this API will not work.

snapshotVideo

void snapshotVideo | (String userId |

| int streamType |

| int sourceType |

|

Take a video screenshot

You can use this API to take a screenshot of the local video image, or of a remote user's primary stream image or substream (screen sharing) image.

Param | DESC |

sourceType | Video image source, which can be the video stream image (TRTCSnapshotSourceTypeStream, generally in higher definition) or the video rendering image (TRTCSnapshotSourceTypeView) |

streamType | Video stream type, which can be the primary stream image (TRTCVideoStreamTypeBig, generally for camera) or substream image (TRTCVideoStreamTypeSub, generally for screen sharing) |

userId | User ID. Pass null to take a screenshot of the local video. |

Note

On Windows, only the TRTCSnapshotSourceTypeStream source is currently supported.

setPerspectiveCorrectionPoints

void setPerspectiveCorrectionPoints(String userId, PointF[] srcPoints, PointF[] dstPoints)

Sets perspective correction coordinate points.

This function allows you to specify coordinate areas for perspective correction.

Param | DESC |

dstPoints | Coordinates of the four vertices of the target (corrected) area, passed in the order top-left, bottom-left, top-right, bottom-right. All coordinates must be normalized to the [0,1] range based on the render view's width and height. Pass null to stop perspective correction of the corresponding stream. |

srcPoints | Coordinates of the four vertices of the original stream image area, passed in the order top-left, bottom-left, top-right, bottom-right. All coordinates must be normalized to the [0,1] range based on the render view's width and height. Pass null to stop perspective correction of the corresponding stream. |

userId | ID of the user corresponding to the target stream. A null value applies the function to the local stream. |
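Both point arrays must be normalized against the render view's width and height and passed in a fixed vertex order. It can therefore help to convert from pixel coordinates in one place. The sketch below is minimal and makes two assumptions: the input points are pixel coordinates within the render view, and they are already ordered top-left, bottom-left, top-right, bottom-right. PerspectivePoints is a hypothetical helper. It returns plain {x, y} pairs so that it runs outside Android.

```java
// Hypothetical helper (not an SDK class): converts four pixel-space vertices of
// the render view into the normalized [0,1] coordinates expected by
// setPerspectiveCorrectionPoints. Returns plain {x, y} pairs.
final class PerspectivePoints {
    private PerspectivePoints() {}

    // pixelPoints must already be ordered: top-left, bottom-left, top-right, bottom-right.
    static float[][] normalize(float[][] pixelPoints, int viewWidth, int viewHeight) {
        if (pixelPoints == null || pixelPoints.length != 4) {
            throw new IllegalArgumentException("exactly four vertices are required");
        }
        if (viewWidth <= 0 || viewHeight <= 0) {
            throw new IllegalArgumentException("view size must be positive");
        }
        float[][] out = new float[4][2];
        for (int i = 0; i < 4; i++) {
            float x = pixelPoints[i][0] / viewWidth;
            float y = pixelPoints[i][1] / viewHeight;
            // Points outside the view cannot be expressed in the documented [0,1] range.
            if (x < 0f || x > 1f || y < 0f || y > 1f) {
                throw new IllegalArgumentException("vertex " + i + " lies outside the view");
            }
            out[i][0] = x;
            out[i][1] = y;
        }
        return out;
    }
}
```

On Android, each pair can then be wrapped as new PointF(x, y) to build the PointF[] that setPerspectiveCorrectionPoints expects.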

setGravitySensorAdaptiveMode

void setGravitySensorAdaptiveMode(int mode)

Set the adaptation mode of gravity sensing (version 11.7 and above)

After gravity sensing is enabled, rotating the capturing device causes the image to be re-rendered on both the capturing side and the audience side. This keeps the image upright in the field of view at all times.

It only takes effect when the SDK's internal camera capturing is used, and only on mobile devices.

1. This API only works on the capturing side. If you only watch video in the room, enabling it has no effect.

2. When the capturing device is rotated by 90 or 270 degrees, the image seen on the capturing device or by the audience may be cropped to preserve the aspect ratio.

Param | DESC |

mode | Gravity sensing mode. See TRTC_GRAVITY_SENSOR_ADAPTIVE_MODE_DISABLE, TRTC_GRAVITY_SENSOR_ADAPTIVE_MODE_FILL_BY_CENTER_CROP, and TRTC_GRAVITY_SENSOR_ADAPTIVE_MODE_FIT_WITH_BLACK_BORDER for details. Default value: TRTC_GRAVITY_SENSOR_ADAPTIVE_MODE_DISABLE. |
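The mode names suggest two ways of handling a frame whose orientation no longer matches the canvas: fill by center crop, or fit with black borders. The sketch below shows only the generic geometry behind those two strategies. The SDK's exact scaling and rounding are not documented here, and RotationFit is a hypothetical helper.

```java
// Hypothetical helper (not an SDK class): generic geometry for the two
// strategies named by the gravity-sensing modes, fill by center crop and
// fit with black borders. The SDK's exact scaling is not specified here.
final class RotationFit {
    private RotationFit() {}

    // Size {width, height} of the source region that stays visible when a
    // srcW x srcH frame is scaled to cover a dstW x dstH canvas and the overflow
    // is cropped around the center.
    static double[] centerCropRegion(int srcW, int srcH, int dstW, int dstH) {
        double scale = Math.max((double) dstW / srcW, (double) dstH / srcH);
        return new double[] { dstW / scale, dstH / scale };
    }

    // Displayed size {width, height} when the whole frame is scaled to fit inside
    // the canvas; the uncovered area of the canvas would be black borders.
    static double[] fitSize(int srcW, int srcH, int dstW, int dstH) {
        double scale = Math.min((double) dstW / srcW, (double) dstH / srcH);
        return new double[] { srcW * scale, srcH * scale };
    }
}
```

Consider a 720x1280 portrait canvas showing a 1280x720 frame after a 90-degree rotation. Here centerCropRegion keeps a 405x720 region of the source and crops the rest. By contrast, fitSize draws the frame at 720x405, with black borders above and below.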

startLocalAudio

void startLocalAudio(int quality)

Enable local audio capturing and publishing

The SDK does not enable the mic by default. To publish local audio, call this API. It enables mic capturing, then encodes and publishes the audio to the current room.

After local audio capturing and publishing is enabled, other users in the room will receive the onUserAudioAvailable(userId, true) notification.

Param | DESC |

quality | Sound quality. TRTC_AUDIO_QUALITY_SPEECH (Smooth): 16 kHz sample rate, mono, 16 Kbps audio bitrate; suitable for audio call scenarios such as online meetings and audio calls. TRTC_AUDIO_QUALITY_DEFAULT (Default): 48 kHz sample rate, mono, 50 Kbps audio bitrate; the SDK's default sound quality, recommended if there are no special requirements. TRTC_AUDIO_QUALITY_MUSIC (HD): 48 kHz sample rate, dual channel + full band, 128 Kbps audio bitrate; suitable for scenarios that require Hi-Fi music transmission, such as online karaoke and music live streaming. |

Note

This API will check the mic permission. If the current application does not have permission to use the mic, the SDK will automatically ask the user to grant the mic permission.

stopLocalAudio

Stop local audio capturing and publishing

After local audio capturing and publishing is stopped, other users in the room will receive the onUserAudioAvailable(userId, false) notification.

muteLocalAudio

void muteLocalAudio(boolean mute)

Pause/Resume publishing local audio stream

After local audio publishing is paused, other users in the room will receive the onUserAudioAvailable(userId, false) notification.

After local audio publishing is resumed, other users in the room will receive the onUserAudioAvailable(userId, true) notification.

Unlike stopLocalAudio, muteLocalAudio(true) does not release the mic permission. Instead, it keeps sending mute packets at an extremely low bitrate. This suits scenarios that require on-cloud recording. Video file formats such as MP4 require continuous audio, and an MP4 recording cannot be played back smoothly if stopLocalAudio is used.

Therefore, muteLocalAudio rather than stopLocalAudio is recommended in scenarios with high requirements for recording file quality.

Param | DESC |

mute | true: mute; false: unmute |

muteRemoteAudio

void muteRemoteAudio(String userId, boolean mute)

Pause/Resume playing back remote audio stream

When you mute the remote audio of a specified user, the SDK will stop playing back the user's audio and pulling the user's audio data.

Param | DESC |

mute | true: mute; false: unmute |

userId | ID of the specified remote user |

Note

This API works when called either before or after room entry (enterRoom), and the mute status will be reset to false after room exit (exitRoom).

muteAllRemoteAudio

void muteAllRemoteAudio(boolean mute)

Pause/Resume playing back all remote users' audio streams

When you mute the audio of all remote users, the SDK will stop playing back all their audio streams and pulling all their audio data.

Param | DESC |

mute | true: mute; false: unmute |

Note

This API works when called either before or after room entry (enterRoom), and the mute status will be reset to false after room exit (exitRoom).

setAudioRoute

void setAudioRoute(int route)

Set audio route

The audio route determines whether sound is played back from the speaker or the receiver of a mobile device. Therefore, this API only applies to mobile devices such as phones.

Generally, a phone has two speakers: one is the receiver at the top, and the other is the stereo speaker at the bottom.

If audio route is set to the receiver, the volume is relatively low, and the sound can be heard clearly only when the phone is put near the ear. This mode has a high level of privacy and is suitable for answering calls.

If audio route is set to the speaker, the volume is relatively high, so there is no need to put the phone near the ear. Therefore, this mode can implement the "hands-free" feature.

Param | DESC |

route | Audio route, i.e., whether the audio is output by speaker or receiver. Default value: TRTC_AUDIO_ROUTE_SPEAKER |

setRemoteAudioVolume

void setRemoteAudioVolume(String userId, int volume)

Set the audio playback volume of remote user

You can mute the audio of a remote user through setRemoteAudioVolume(userId, 0).

Param | DESC |

userId | ID of the specified remote user |

volume | Volume. 100 is the original volume. Value range: [0,150]. Default value: 100 |

Note

If 100 is still not loud enough for you, you can set the volume to up to 150, but there may be side effects.

setAudioCaptureVolume

void setAudioCaptureVolume(int volume)

Set the capturing volume of local audio

Param | DESC |

volume | Volume. 100 is the original volume. Value range: [0,150]. Default value: 100 |

Note

If 100 is still not loud enough for you, you can set the volume to up to 150, but there may be side effects.

getAudioCaptureVolume

Get the capturing volume of local audio

setAudioPlayoutVolume

void setAudioPlayoutVolume(int volume)

Set the playback volume of remote audio

This API controls the volume of the sound ultimately delivered by the SDK to the system for playback. It affects the volume of the recorded local audio file but not the volume of in-ear monitoring.

Param | DESC |

volume | Volume. 100 is the original volume. Value range: [0,150]. Default value: 100 |

Note

If 100 is still not loud enough for you, you can set the volume to up to 150, but there may be side effects.

getAudioPlayoutVolume

Get the playback volume of remote audio

enableAudioVolumeEvaluation

void enableAudioVolumeEvaluation | (boolean enable |

|

Enable volume reminder

After this feature is enabled, the SDK returns volume evaluation results in the onUserVoiceVolume callback of TRTCCloudListener. Results cover the local user (while publishing) and remote users.

Param | DESC |

enable | Whether to enable the volume prompt. It’s disabled by default. |

params |

Note

To enable this feature, call this API before calling startLocalAudio.

startAudioRecording

int startAudioRecording |

Start audio recording

After you call this API, the SDK will selectively record local and remote audio streams (such as local audio, remote audio, background music, and sound effects) into a local file.

This API works when called either before or after room entry. If a recording task has not been stopped through stopAudioRecording before room exit, it will be automatically stopped after room exit.

The startup and completion status of the recording are reported through local recording-related callbacks. See the related TRTCCloud callbacks for reference.

Param | DESC |

param |

Note

Since v11.5, audio recording results are reported through asynchronous callbacks instead of return values. See the related TRTCCloud callbacks.

Return Desc:

0: success; -1: audio recording has been started; -2: failed to create file or directory; -3: the audio format of the specified file extension is not supported.

stopAudioRecording

Stop audio recording

If a recording task has not been stopped through this API before room exit, it will be automatically stopped after room exit.

startLocalRecording

void startLocalRecording |

Start local media recording

This API records the audio/video content during live streaming into a local file.

Param | DESC |

params |

stopLocalRecording

Stop local media recording

If a recording task has not been stopped through this API before room exit, it will be automatically stopped after room exit.

setRemoteAudioParallelParams

void setRemoteAudioParallelParams(TRTCCloudDef.TRTCAudioParallelParams params)

Set the parallel strategy of remote audio streams

This is intended for rooms with many speakers.

Param | DESC |

params | Audio parallel parameter. For more information, please see TRTCAudioParallelParams |

enable3DSpatialAudioEffect

void enable3DSpatialAudioEffect(boolean enabled)

Enable 3D spatial effect

Enable the 3D spatial audio effect. Note that the TRTC_AUDIO_QUALITY_SPEECH (Smooth) or TRTC_AUDIO_QUALITY_DEFAULT (Default) sound quality should be used.

Param | DESC |

enabled | Whether to enable 3D spatial effect. It’s disabled by default. |

updateSelf3DSpatialPosition

void updateSelf3DSpatialPosition(int[] position, float[] axisForward, float[] axisRight, float[] axisUp)

Update self position and orientation for 3D spatial effect

Update your own position and orientation in the world coordinate system. The SDK calculates the relative positions between you and the remote users from the parameters of this method, then renders the spatial sound effect. Each array must have a length of 3.

Param | DESC |

axisForward | The unit vector of the forward axis of user coordinate system. The three values represent the forward, right and up coordinate values in turn. |

axisRight | The unit vector of the right axis of user coordinate system. The three values represent the forward, right and up coordinate values in turn. |

axisUp | The unit vector of the up axis of user coordinate system. The three values represent the forward, right and up coordinate values in turn. |

position | The coordinate of self in the world coordinate system. The three values represent the forward, right and up coordinate values in turn. |
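The sketch below illustrates the coordinate convention. It builds the three axis vectors for a listener who only turns left or right (yaw) about the world up axis. Every vector uses the documented (forward, right, up) component order. Three things here are illustrative, not SDK definitions: the yaw-only assumption, the sign convention (positive yaw turns toward world right), and the SpatialAxes helper name.

```java
// Hypothetical helper (not an SDK class): builds axisForward, axisRight and
// axisUp for a listener who only turns about the world up axis (yaw).
// Components follow the documented order: (forward, right, up).
final class SpatialAxes {
    private SpatialAxes() {}

    // Returns {axisForward, axisRight, axisUp}, each a unit vector of length 3.
    // Positive yawDegrees turns the listener toward world "right" (assumed convention).
    static float[][] forYaw(double yawDegrees) {
        double r = Math.toRadians(yawDegrees);
        float c = (float) Math.cos(r);
        float s = (float) Math.sin(r);
        float[] forward = { c, s, 0f };
        float[] right = { -s, c, 0f };
        float[] up = { 0f, 0f, 1f };
        return new float[][] { forward, right, up };
    }
}
```

At a yaw of 0, this yields forward (1, 0, 0), right (0, 1, 0), and up (0, 0, 1). At 90 degrees the listener faces world right, so forward becomes approximately (0, 1, 0) and right approximately (-1, 0, 0).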

Note

Please limit the calling frequency appropriately. It's recommended that the interval between two operations be at least 100ms.

updateRemote3DSpatialPosition

void updateRemote3DSpatialPosition(String userId, int[] position)

Update the specified remote user's position for 3D spatial effect

Update the specified remote user's position in the world coordinate system. The SDK calculates the relative position between you and that remote user from the parameters of this method, then renders the spatial sound effect. The array must have a length of 3.

Param | DESC |

position | The coordinate of the specified remote user in the world coordinate system. The three values represent the forward, right and up coordinate values in turn. |

userId | ID of the specified remote user. |

Note

Please limit the calling frequency appropriately. It's recommended that the interval between two operations of the same remote user be at least 100ms.
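Both 3D position APIs recommend spacing calls at least 100 ms apart. For updateRemote3DSpatialPosition, the spacing applies per remote user. The sketch below is a minimal per-key throttle, keyed by userId. It assumes you use a separate instance for your own position. PositionThrottle is a hypothetical helper, and timestamps are passed in explicitly so that the logic is easy to test.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper (not an SDK class): accepts at most one position update
// per key within minIntervalMs; later updates inside the window are rejected.
final class PositionThrottle {
    private final long minIntervalMs;
    private final Map<String, Long> lastAcceptedMs = new HashMap<>();

    PositionThrottle(long minIntervalMs) {
        this.minIntervalMs = minIntervalMs;
    }

    // Returns true (and records nowMs) if an update for key may be sent now.
    synchronized boolean tryAcquire(String key, long nowMs) {
        Long last = lastAcceptedMs.get(key);
        if (last != null && nowMs - last < minIntervalMs) {
            return false;
        }
        lastAcceptedMs.put(key, nowMs);
        return true;
    }

    // Forgets a key, e.g. when that remote user leaves the room.
    synchronized void remove(String key) {
        lastAcceptedMs.remove(key);
    }
}
```

On Android, nowMs could come from SystemClock.elapsedRealtime(). This sketch has a design trade-off: it simply drops updates that arrive too early. If the final position matters, you may want to remember the latest dropped position and send it once the interval has elapsed.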

set3DSpatialReceivingRange

void set3DSpatialReceivingRange(String userId, int range)

Set the maximum 3D spatial attenuation range for userId's audio stream

After the range is set, the specified user's audio stream attenuates to zero within this range.

Param | DESC |

range | Maximum attenuation range of the audio stream. |

userId | ID of the specified user. |

getDeviceManager

Get device management class (TXDeviceManager)

getBeautyManager

Get beauty filter management class (TXBeautyManager)

You can use the following features with beauty filter management:

Set beauty effects such as "skin smoothing", "brightening", and "rosy skin".

Set face adjustment effects such as "eye enlarging", "face slimming", "chin slimming", "chin lengthening/shortening", "face shortening", "nose narrowing", "eye brightening", "teeth whitening", "eye bag removal", "wrinkle removal", and "smile line removal".

Set face adjustment effects such as "hairline", "eye distance", "eye corners", "mouth shape", "nose wing", "nose position", "lip thickness", and "face shape".

Set makeup effects such as "eye shadow" and "blush".

Set animated effects such as animated sticker and facial pendant.

setWatermark

void setWatermark(Bitmap image, int streamType, float x, float y, float width)

Add watermark

The watermark position and size are determined by the normalized coordinates (x, y, width), which describe a rect in the format (x, y, width, height):

x: X coordinate of the watermark, a floating-point number between 0 and 1.

y: Y coordinate of the watermark, a floating-point number between 0 and 1.

width: width of the watermark, a floating-point number between 0 and 1.

height: does not need to be set. The SDK calculates it automatically from the watermark image's aspect ratio.

Sample parameters:

Suppose the encoding resolution of the current video is 540x960 and (x, y, width) is set to (0.1, 0.1, 0.2). The top-left point of the watermark is then at (540 * 0.1, 960 * 0.1), i.e., (54, 96). The watermark width is 540 * 0.2 = 108 px. The SDK calculates the watermark height automatically from the image's aspect ratio.
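The sample calculation above can be reproduced with a small helper. WatermarkRect is hypothetical, and rounding to the nearest pixel is an assumption. The height formula follows the documented behavior of deriving height from the watermark image's aspect ratio.

```java
// Hypothetical helper (not an SDK class): converts the normalized (x, y, width)
// watermark parameters into pixels of the encoded frame. Rounding to the
// nearest pixel is an assumption.
final class WatermarkRect {
    private WatermarkRect() {}

    // Returns {left, top, width, height} in pixels; height follows the
    // watermark image's aspect ratio, as the SDK is documented to do.
    static int[] toPixels(int encWidth, int encHeight, float x, float y, float width,
                          int imageWidth, int imageHeight) {
        int left = Math.round(encWidth * x);
        int top = Math.round(encHeight * y);
        int w = Math.round(encWidth * width);
        int h = Math.round(w * (float) imageHeight / imageWidth);
        return new int[] { left, top, w, h };
    }
}
```

Take the sample values (540x960 encoding, x = 0.1, y = 0.1, width = 0.2) and a 200x100 watermark image. For these inputs, toPixels returns {54, 96, 108, 54}.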

Param | DESC |

image | Watermark image, which must be a PNG image with transparent background |

x, y, width | Normalized coordinates of the watermark relative to the encoding resolution. Value range: 0–1. |

streamType | Specify for which image to set the watermark. For more information, please see TRTCVideoStreamType. |

Note

If you want to set watermarks for both the primary image (generally for the camera) and the substream image (generally for screen sharing), you need to call this API twice with streamType set to different values.

getAudioEffectManager

Get sound effect management class (TXAudioEffectManager)

TXAudioEffectManager is a sound effect management API, through which you can implement the following features:

Background music: both online music and local music can be played back with various features such as speed adjustment, pitch adjustment, original voice, accompaniment, and loop.

In-ear monitoring: the sound captured by the mic is played back in the headphones in real time, which is generally used for music live streaming.

Reverb effect: karaoke room, small room, big hall, deep, resonant, and other effects.

Voice changing effect: young girl, middle-aged man, heavy metal, and other effects.

Short sound effect: short sound effect files such as applause and laughter are supported (for files less than 10 seconds in length, set the isShortFile parameter to true).

startSystemAudioLoopback

Enable system audio capturing

This API captures audio data from another app and mixes it into the SDK's current audio stream, so that other users in the room can hear the audio played back by that app.

In online education scenarios, a teacher can use this API to have the SDK capture the audio of instructional videos and broadcast it to students in the room.

In live music scenarios, an anchor can use this API to have the SDK capture the music played back by his or her player so as to add background music to the room.

Note

1. This API only works on Android API level 29 (Android 10) and above.

2. Call this API first to enable system audio capturing. It takes effect only after you call startScreenCapture to start screen sharing.

3. You need to add a foreground service with android:foregroundServiceType="mediaProjection" set, to ensure that system audio capturing is not silenced.

4. The SDK only captures audio from apps that satisfy the capture policy and audio usage requirements. Currently, the audio usages captured by the SDK are USAGE_MEDIA and USAGE_GAME.

stopSystemAudioLoopback

Stop system audio capturing (not supported on iOS)

startScreenCapture

void startScreenCapture(int streamType, TRTCCloudDef.TRTCVideoEncParam encParams, TRTCCloudDef.TRTCScreenShareParams shareParams)

Start screen sharing

This API supports capturing the screen of the entire Android system, which can implement system-wide screen sharing similar to VooV Meeting.

Recommended encoding parameters for screen sharing on Android (TRTCVideoEncParam):

Resolution (videoResolution): 1280x720

Frame rate (videoFps): 10 fps

Bitrate (videoBitrate): 1200 Kbps

Resolution adaption (enableAdjustRes): false

Param | DESC |

encParams | Encoding parameters. For more information, see TRTCCloudDef#TRTCVideoEncParam. If encParams is null, the SDK automatically uses the previously set encoding parameters. |

shareParams | For more information, please see TRTCCloudDef#TRTCScreenShareParams. You can use the floatingView parameter to pop up a floating window (you can also use Android's WindowManager parameter to configure automatic pop-up). |

stopScreenCapture

Stop screen sharing

pauseScreenCapture

Pause screen sharing

Note

Starting from v11.5, while screen capture is paused, the SDK keeps outputting the last frame at a frame rate of 1 fps.

resumeScreenCapture

Resume screen sharing

setSubStreamEncoderParam

void setSubStreamEncoderParam |

Set the video encoding parameters of screen sharing (i.e., substream) (for desktop and mobile systems)

This API can set the image quality of screen sharing (i.e., the substream) viewed by remote users, which is also the image quality of screen sharing in on-cloud recording files.

Please note the differences between the following two APIs:

setVideoEncoderParam is used to set the video encoding parameters of the primary stream image (TRTCVideoStreamTypeBig, generally for camera).

setSubStreamEncoderParam is used to set the video encoding parameters of the substream image (TRTCVideoStreamTypeSub, generally for screen sharing).

Param | DESC |

param |

enableCustomVideoCapture

void enableCustomVideoCapture(int streamType, boolean enable)

Enable/Disable custom video capturing mode

After this mode is enabled, the SDK no longer runs its own video capturing process, so camera capturing and beauty filter operations stop. Only the video encoding and sending capabilities are retained.

Param | DESC |

enable | Whether to enable. Default value: false |

streamType | Specify video stream type (TRTCVideoStreamTypeBig: HD big image; TRTCVideoStreamTypeSub: substream image). |

sendCustomVideoData

void sendCustomVideoData(int streamType, TRTCCloudDef.TRTCVideoFrame frame)

Deliver captured video frames to SDK

You can use this API to deliver video frames you capture to the SDK, and the SDK will encode and transfer them through its own network module.

There are two delivery schemes for Android:

Memory-based delivery scheme: easy to integrate but with poor performance, so it is not suitable for high-resolution scenarios.

Video memory-based delivery scheme: integrating it requires some OpenGL knowledge, but its performance is good. For resolutions higher than 640x360, use this scheme.

Param | DESC |

frame | Video data. If the memory-based delivery scheme is used, set the data field; if the video memory-based delivery scheme is used, set the TRTCTexture field. For more information, see TRTCCloudDef.TRTCVideoFrame. |

streamType | Specify video stream type (TRTCVideoStreamTypeBig: HD big image; TRTCVideoStreamTypeSub: substream image). |

Note

1. We recommend calling generateCustomPTS immediately after capturing a video frame to get its timestamp value; this gives the best audio/video sync.

2. The frame rate that the SDK finally encodes is determined not by how often you call this API, but by the FPS you set in setVideoEncoderParam.

3. Keep the calling interval of this API as even as possible; otherwise, problems such as an unstable encoder output frame rate or out-of-sync audio/video may occur.
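One common way to keep the calling interval even is to compute each frame's due time from a fixed start time, instead of sleeping a fixed amount after each call, so that timing errors do not accumulate. The sketch below is illustrative only: `FramePacer` is a hypothetical helper, times are plain millisecond values that you would take from a monotonic clock (for example `System.nanoTime() / 1_000_000`), and the capture FPS is assumed to match the FPS set in setVideoEncoderParam.

```java
/** Hypothetical drift-free pacer: frame n is due at startMs + n * 1000 / fps. */
final class FramePacer {
    private final long startMs;
    private final int fps;

    FramePacer(long startMs, int fps) {
        if (fps <= 0) {
            throw new IllegalArgumentException("fps must be positive");
        }
        this.startMs = startMs;
        this.fps = fps;
    }

    /** Due time (ms) of the zero-based frame index, always computed from the start time. */
    long dueTimeMs(long frameIndex) {
        // Computing from startMs each time keeps integer rounding from accumulating.
        return startMs + frameIndex * 1000L / fps;
    }

    /** How long to wait (ms) before delivering frameIndex; 0 if it is already due or late. */
    long waitMs(long frameIndex, long nowMs) {
        return Math.max(0L, dueTimeMs(frameIndex) - nowMs);
    }
}
```

At 15 fps, frame 15 is due exactly 1000 ms after the start, regardless of how much jitter earlier deliveries had.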

enableCustomAudioCapture

void enableCustomAudioCapture(boolean enable)

Enable custom audio capturing mode

After this mode is enabled, the SDK stops its own audio capturing process (mic data capturing) and retains only its audio encoding and sending capabilities.

Param | DESC |

enable | Whether to enable. Default value: false |

Note

Because acoustic echo cancellation (AEC) requires strict control over audio capturing and playback timing, AEC may fail after custom audio capturing is enabled.

sendCustomAudioData

void sendCustomAudioData(TRTCCloudDef.TRTCAudioFrame frame)

Deliver captured audio data to SDK

We recommend filling in the following fields of the TRTCAudioFrame parameter (other fields can be left empty):

audioFormat: audio data format, which can only be TRTCAudioFrameFormatPCM.

data: audio frame buffer. Audio frame data must be in PCM format, and frame lengths of 5–100 ms are supported (20 ms is recommended). Length calculation: for example, with a sample rate of 48000, a mono channel, and a 20 ms frame, the frame length is `48000 * 0.02s * 1 * 16 bit = 15360 bit = 1920 bytes`.

sampleRate: sample rate. Valid values: 16000, 24000, 32000, 44100, 48000.

channel: number of channels (if stereo is used, data is interleaved). Valid values: 1: mono channel; 2: dual channel.

timestamp (ms): set it to the timestamp at which the audio frame was captured, which you can obtain by calling generateCustomPTS right after getting an audio frame.
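The length calculation for the data field generalizes to sampleRate * duration * channels * 2 bytes for 16-bit PCM. A minimal sketch, assuming 16-bit samples (as in the example above) and a whole-millisecond frame duration; `PcmFrameSize` is a hypothetical helper, not an SDK class.

```java
/** Hypothetical helper: byte length of one 16-bit PCM frame for the data field. */
final class PcmFrameSize {
    private static final int BYTES_PER_SAMPLE = 2; // 16-bit PCM

    private PcmFrameSize() {}

    static int bytesPerFrame(int sampleRate, int channels, int frameMs) {
        switch (sampleRate) {
            case 16000: case 24000: case 32000: case 44100: case 48000:
                break; // valid sample rates listed above
            default:
                throw new IllegalArgumentException("unsupported sample rate: " + sampleRate);
        }
        if (channels != 1 && channels != 2) {
            throw new IllegalArgumentException("channels must be 1 or 2");
        }
        if (frameMs < 5 || frameMs > 100) {
            throw new IllegalArgumentException("frame length must be 5-100 ms");
        }
        // Samples per channel contained in one frame of frameMs milliseconds.
        long samplesPerChannel = (long) sampleRate * frameMs / 1000;
        // Interleaved channels, 2 bytes per sample.
        return (int) (samplesPerChannel * channels * BYTES_PER_SAMPLE);
    }
}
```

With 48000 Hz, mono, and 20 ms this yields the 1920 bytes from the example; stereo doubles it to 3840 bytes.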

Param | DESC |

frame | Audio data |

Note

Call this API at intervals that match the frame length as closely as possible; uneven delivery intervals may cause audio lag.

enableMixExternalAudioFrame

void enableMixExternalAudioFrame(boolean enablePublish, boolean enablePlayout)

Enable/Disable custom audio track

After this feature is enabled, you can mix a custom audio track into the SDK. The two boolean parameters control whether this track is played back remotely, locally, or both.

Param | DESC |

enablePlayout | Whether the mixed audio track should be played back locally. Default value: false |

enablePublish | Whether the mixed audio track should be played back remotely. Default value: false |

Note

If you specify both enablePublish and enablePlayout as false, the custom audio track will be completely closed.

mixExternalAudioFrame

int mixExternalAudioFrame(TRTCCloudDef.TRTCAudioFrame frame)

Mix custom audio track into SDK

Before you use this API to mix custom PCM audio into the SDK, you need to first enable custom audio tracks through enableMixExternalAudioFrame.

You are expected to feed audio data into the SDK at an even pace, but we understand that it can be challenging to call an API at absolutely regular intervals.

Given this, we have provided a buffer pool in the SDK, which can cache the audio data you pass in to reduce the fluctuations in intervals between API calls.

The value returned by this API indicates the size (ms) of the buffer pool. For example, if 50 is returned, the buffer pool holds 50 ms of audio data; as long as you call this API again within 50 ms, the SDK can ensure that continuous audio data is mixed.

If the value returned is 100 or greater, you can wait until one audio frame has been played before calling the API again. If the value returned is smaller than 100, there is not enough data in the buffer pool, and you should feed more audio data into the SDK until the buffer pool is above the safety level.
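The buffer-pool rules above can be turned into a simple top-up loop. This is a sketch under stated assumptions: `ExternalAudioMixer` is a hypothetical stand-in for mixExternalAudioFrame (so the logic can be shown without the SDK), `MixFeeder` is a hypothetical helper, and 100 ms is used as the safety level because that is the threshold named above. As described in the return value of this API, a negative result is treated as an error.

```java
import java.util.Iterator;

/** Hypothetical stand-in for mixExternalAudioFrame: returns buffered ms, or a negative error code. */
interface ExternalAudioMixer {
    int mix(byte[] pcmFrame);
}

/** Hypothetical helper that keeps the SDK-side buffer pool above the safety level. */
final class MixFeeder {
    static final int SAFE_LEVEL_MS = 100;

    private MixFeeder() {}

    /** Feeds frames until the pool reports at least SAFE_LEVEL_MS or the source runs out. */
    static int topUp(ExternalAudioMixer mixer, Iterator<byte[]> source) {
        int fed = 0;
        while (source.hasNext()) {
            int buffered = mixer.mix(source.next());
            fed++;
            if (buffered < 0) {
                // -1 means enableMixExternalAudioFrame was not called.
                throw new IllegalStateException("mixExternalAudioFrame error: " + buffered);
            }
            if (buffered >= SAFE_LEVEL_MS) {
                break; // Enough data buffered; the caller can wait about one frame before the next call.
            }
        }
        return fed;
    }
}
```

With 20 ms frames and an empty pool, five calls bring the pool to the 100 ms safety level.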

data: audio frame buffer. Audio frames must be in PCM format. Each frame can be 5-100 ms (20 ms is recommended) in duration. For example, with a sample rate of 48000 and a mono channel, a 20 ms frame is 48000 x 0.02s x 1 x 16 bit = 15360 bit = 1920 bytes.

sampleRate: sample rate. Valid values: 16000, 24000, 32000, 44100, 48000.

channel: number of sound channels (if dual-channel is used, data is interleaved). Valid values: 1 (mono channel); 2 (dual channel).

timestamp: timestamp (ms). Set it to the timestamp at which the audio frame was captured, which you can obtain by calling generateCustomPTS right after getting an audio frame.

Param | DESC |

frame | Audio data |

Return Desc:

If the value returned is 0 or greater, it represents the current size (ms) of the buffer pool; if it is smaller than 0, an error occurred. -1 indicates that enableMixExternalAudioFrame was not called to enable custom audio tracks.

setMixExternalAudioVolume

void setMixExternalAudioVolume(int publishVolume, int playoutVolume)

Set the publish volume and playback volume of mixed custom audio track

Param | DESC |

playoutVolume | Local playback volume, from 0 to 100; -1 means no change |

publishVolume | Publish (remote) volume, from 0 to 100; -1 means no change |
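The -1 convention lets you change one volume without touching the other; for example, setMixExternalAudioVolume(-1, 30) changes only the local playback volume. The hypothetical helper below models how a requested value maps to the effective volume. Rejecting values outside 0 to 100 is an assumption of this sketch; how the SDK itself treats such values is not described here.

```java
/** Hypothetical model of the setMixExternalAudioVolume argument rules. */
final class MixVolume {
    static final int NO_CHANGE = -1;

    private MixVolume() {}

    /** Returns the effective volume: -1 keeps the current value, otherwise 0-100 is applied. */
    static int resolve(int current, int requested) {
        if (requested == NO_CHANGE) {
            return current;
        }
        if (requested < 0 || requested > 100) {
            // Assumption of this sketch: out-of-range values are rejected.
            throw new IllegalArgumentException("volume must be 0-100 or -1");
        }
        return requested;
    }
}
```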

generateCustomPTS

Generate custom capturing timestamp

This API is only suitable for the custom capturing mode and is used to solve the problem of out-of-sync audio/video caused by the inconsistency between the capturing time and delivery time of audio/video frames.

When you call APIs such as sendCustomVideoData or sendCustomAudioData for custom video or audio capturing, please use this API as instructed below:

1. First, when a video or audio frame is captured, call this API to get the corresponding PTS timestamp.

2. Then, send the video or audio frame to the preprocessing module you use (such as a third-party beauty filter or sound effect component).

3. When you actually call sendCustomVideoData or sendCustomAudioData for delivery, assign the PTS timestamp recorded when the frame was captured to the timestamp field in TRTCVideoFrame or TRTCAudioFrame.

Return Desc:

Timestamp in ms
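The three steps above amount to stamping a frame when it is captured and carrying that stamp through preprocessing. A minimal sketch: `StampedFrame` and `CapturePipeline` are hypothetical names, the `LongSupplier` stands in for generateCustomPTS so the example runs without the SDK, and `ptsMs` is the value you would assign to the timestamp field of TRTCVideoFrame or TRTCAudioFrame.

```java
import java.util.function.LongSupplier;
import java.util.function.UnaryOperator;

/** Hypothetical holder: frame data plus the PTS taken at capture time. */
final class StampedFrame {
    final byte[] data;
    final long ptsMs;

    StampedFrame(byte[] data, long ptsMs) {
        this.data = data;
        this.ptsMs = ptsMs;
    }
}

/** Hypothetical pipeline: capture, preprocess, then deliver with the capture-time PTS. */
final class CapturePipeline {
    private final LongSupplier ptsSource;           // stands in for generateCustomPTS()
    private final UnaryOperator<byte[]> preprocess; // e.g. a third-party beauty filter or sound effect

    CapturePipeline(LongSupplier ptsSource, UnaryOperator<byte[]> preprocess) {
        this.ptsSource = ptsSource;
        this.preprocess = preprocess;
    }

    /** Step 1: take the PTS the moment the frame is captured. */
    StampedFrame onCaptured(byte[] raw) {
        return new StampedFrame(raw, ptsSource.getAsLong());
    }

    /** Steps 2 and 3: preprocess, keeping the capture-time PTS rather than the current time. */
    StampedFrame prepareForDelivery(StampedFrame captured) {
        return new StampedFrame(preprocess.apply(captured.data), captured.ptsMs);
    }
}
```

Even if preprocessing takes tens of milliseconds, the delivered frame still carries the PTS from the moment of capture, which is what keeps audio and video aligned.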

setLocalVideoProcessListener

int setLocalVideoProcessListener | (int pixelFormat |

| int bufferType |

|

Set video data callback for third-party beauty filters

After this callback is set, the SDK passes captured video frames to the listener you set so that a third-party beauty filter component can process them. The SDK then encodes and sends the processed video frames.

Param | DESC |

bufferType | Specify the format of the data called back. Currently, it supports: TRTC_VIDEO_BUFFER_TYPE_TEXTURE: suitable when pixelFormat is set to TRTC_VIDEO_PIXEL_FORMAT_Texture_2D. TRTC_VIDEO_BUFFER_TYPE_BYTE_BUFFER: suitable when pixelFormat is set to TRTC_VIDEO_PIXEL_FORMAT_I420. TRTC_VIDEO_BUFFER_TYPE_BYTE_ARRAY: suitable when pixelFormat is set to TRTC_VIDEO_PIXEL_FORMAT_I420. |

listener | Callback that receives the captured video frames for third-party processing |

pixelFormat | Specify the format of the pixel called back. Currently, it supports: TRTC_VIDEO_PIXEL_FORMAT_Texture_2D: video memory-based texture scheme. TRTC_VIDEO_PIXEL_FORMAT_I420: memory-based data scheme. |

Return Desc:

0: success; values smaller than 0: error
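The pixelFormat and bufferType rows above pair up in a fixed way, which can be checked before the listener is registered. In this sketch the enums are hypothetical mirrors of the TRTC_VIDEO_PIXEL_FORMAT_* and TRTC_VIDEO_BUFFER_TYPE_* names (in the SDK they are passed as int values), and treating any pair not listed in the table as unsupported is an assumption.

```java
/** Hypothetical mirrors of the pixel format names; the SDK passes these as ints. */
enum PixelFormat { TEXTURE_2D, I420, RGBA }

/** Hypothetical mirrors of the buffer type names; the SDK passes these as ints. */
enum BufferType { TEXTURE, BYTE_BUFFER, BYTE_ARRAY }

/** Hypothetical pre-check for the pairings listed for setLocalVideoProcessListener. */
final class FormatCompat {
    private FormatCompat() {}

    static boolean processListenerSupports(PixelFormat pixel, BufferType buffer) {
        switch (buffer) {
            case TEXTURE:
                // Texture buffers pair with the video memory-based Texture_2D format.
                return pixel == PixelFormat.TEXTURE_2D;
            case BYTE_BUFFER:
            case BYTE_ARRAY:
                // Memory-based buffers pair with I420 for this listener.
                return pixel == PixelFormat.I420;
            default:
                return false;
        }
    }
}
```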

setLocalVideoRenderListener

int setLocalVideoRenderListener | (int pixelFormat |

| int bufferType |

|

Set the callback of custom rendering for local video

After this callback is set, the SDK will skip its own rendering process and call back the captured data. Therefore, you need to complete image rendering on your own.

pixelFormat specifies the format of the data called back. Currently, Texture2D, I420, and RGBA formats are supported. bufferType specifies the buffer type. BYTE_BUFFER is suitable for the JNI layer, while BYTE_ARRAY can be used in direct operations at the Java layer.

Param | DESC |

bufferType | Specify the data structure of the video frame: TRTC_VIDEO_BUFFER_TYPE_TEXTURE: suitable when pixelFormat is set to TRTC_VIDEO_PIXEL_FORMAT_Texture_2D. TRTC_VIDEO_BUFFER_TYPE_BYTE_BUFFER: suitable when pixelFormat is set to TRTC_VIDEO_PIXEL_FORMAT_I420 or TRTC_VIDEO_PIXEL_FORMAT_RGBA. TRTC_VIDEO_BUFFER_TYPE_BYTE_ARRAY: suitable when pixelFormat is set to TRTC_VIDEO_PIXEL_FORMAT_I420 or TRTC_VIDEO_PIXEL_FORMAT_RGBA. |

listener | Callback of custom video rendering. The callback is triggered once for each video frame |

pixelFormat | Specify the format of the video frame, such as: TRTC_VIDEO_PIXEL_FORMAT_Texture_2D: OpenGL texture format, which is suitable for GPU processing and has high processing efficiency. TRTC_VIDEO_PIXEL_FORMAT_I420: standard I420 format, which is suitable for CPU processing and has lower processing efficiency. TRTC_VIDEO_PIXEL_FORMAT_RGBA: RGBA format, which is suitable for CPU processing and has lower processing efficiency. |

Return Desc:

0: success; values smaller than 0: error
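When pixelFormat is I420 or RGBA with a BYTE_BUFFER or BYTE_ARRAY bufferType, your renderer works on raw pixel data, and it helps to know the expected buffer size. A sketch, assuming tightly packed planes with no row padding and even dimensions for I420; `FrameBufferSize` is a hypothetical helper, not part of the SDK.

```java
/** Hypothetical helper: expected byte size of one CPU-side video frame. */
final class FrameBufferSize {
    private FrameBufferSize() {}

    private static void requirePositive(int width, int height) {
        if (width <= 0 || height <= 0) {
            throw new IllegalArgumentException("width and height must be positive");
        }
    }

    /** I420: full-size Y plane plus U and V planes at half width and half height. */
    static int i420Bytes(int width, int height) {
        requirePositive(width, height);
        if (width % 2 != 0 || height % 2 != 0) {
            throw new IllegalArgumentException("this sketch assumes even I420 dimensions");
        }
        return width * height * 3 / 2;
    }

    /** RGBA: 4 bytes (R, G, B, A) per pixel. */
    static int rgbaBytes(int width, int height) {
        requirePositive(width, height);
        return width * height * 4;
    }
}
```

For a 640x360 frame this gives 345600 bytes in I420 and 921600 bytes in RGBA, which also illustrates why RGBA costs more CPU-side bandwidth.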

setRemoteVideoRenderListener

int setRemoteVideoRenderListener | (String userId |

| int pixelFormat |

| int bufferType |

|

Set the callback of custom rendering for remote video

After this callback is set, the SDK skips its own rendering process and calls back the decoded video data instead, so you need to render the image yourself.

pixelFormat specifies the format of the called back data, such as NV12, I420, and 32BGRA. bufferType specifies the buffer type. PixelBuffer has the highest efficiency, while NSData makes the SDK perform a memory conversion internally, which will result in extra performance loss.

Param | DESC |

bufferType | Specify video data structure type. |

listener | listen for custom rendering |

pixelFormat | Specify the format of the pixel called back |

userId | ID of the specified remote user |

Note

Before this API is called, startRemoteView needs to be called to get the video stream of the remote user (the view can be set to null in this case); otherwise, no data will be called back.

Return Desc:

0: success; values smaller than 0: error

setAudioFrameListener

void setAudioFrameListener |

Set custom audio data callback

After this callback is set, the SDK will internally call back the audio data (in PCM format), including: