What Is Jitter, and How Can a Jitter Buffer Reduce It?

Have you ever run into instability, buffering, degraded quality, or audio-video desynchronization during a video call or while watching a live broadcast? Problems like these can tempt you to refresh the page again and again, or to give up and close the application. A common cause behind them is network jitter.

As professional audio-video developers, it is crucial for us to understand the causes of jitter and find solutions in order to provide users with high-quality audio-video calling services and live streaming experiences.

What is jitter

First, let's look at what data packets are. After capturing audio and video data, the sender encodes it and encapsulates it into a series of small data packets, which are then transmitted over the network to the receiver. The receiver unpacks and decodes the packets and finally delivers the processed audio and video data to the player for playback.

In real-time audio-video transmission, fluctuating network latency, packet loss, bandwidth limitations, and other factors cause packets to spend different amounts of time crossing the network. Even when the sender emits packets at a steady pace (for example, one audio packet every 20 ms), they reach the receiver at irregular intervals. This variation in packet delay is called jitter.
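To make the definition concrete, here is a small Python sketch with made-up timestamps: packets leave the sender every 20 ms, but each spends a different amount of time in transit, so the gaps between arrivals are uneven. The function names are illustrative only. The second function computes, for each consecutive pair of packets, how far the arrival spacing strays from the send spacing; this is the raw quantity that jitter measurements summarize.

```python
# Hypothetical example data: packets sent every 20 ms, arrival times in ms.
send_ms = [0, 20, 40, 60, 80]
arrival_ms = [50, 72, 88, 135, 141]


def transit_times(send, arrival):
    """One-way transit time of each packet (arrival time minus send time)."""
    return [r - s for s, r in zip(send, arrival)]


def interarrival_deviation(send, arrival):
    """Arrival spacing minus send spacing for each consecutive packet pair."""
    return [
        (arrival[i] - arrival[i - 1]) - (send[i] - send[i - 1])
        for i in range(1, len(send))
    ]


print(transit_times(send_ms, arrival_ms))           # [50, 52, 48, 75, 61]
print(interarrival_deviation(send_ms, arrival_ms))  # [2, -4, 27, -14]
```

Because only differences between timestamps enter the deviation, a constant offset between the sender's and receiver's clocks cancels out, so this quantity can be measured without synchronized clocks.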

Causes of jitter

Jitter is typically associated with instability in network transmission and in the playback process. Possible causes include:

- Network latency: During real-time audio-video transmission, data packets may encounter varying degrees of delay while being transmitted over the network. This can result in unstable arrival times of the packets, leading to jitter.

- Packet loss: Packet loss in the network can prevent some video frames from reaching the receiver intact, which shows up as jitter symptoms such as frame skipping or freezing.

- Bandwidth limitations: When network bandwidth is insufficient for the data being sent, packets queue up along the path and their transmission speed becomes unstable, leading to jitter.

- Codec performance: The performance of the codec can also affect jitter. If the codec cannot process data as fast as packets arrive, the result can be stuttering or frame skipping.

- Player issues: If the player is unable to maintain a stable playback speed while processing video frames, it can also lead to video jitter.

Measurement of jitter

Measuring network jitter primarily involves quantifying how much packet arrival times fluctuate. Most of the methods below start from the inter-arrival deviation: for each pair of consecutive packets, the difference between their arrival spacing and their send spacing. Commonly used methods include:

- Mean Jitter: Compute the average of the absolute inter-arrival deviations. Mean jitter reflects the overall fluctuation of network latency but may not capture short bursts of jitter accurately.

- Jitter Variance: Compute the variance of the inter-arrival deviations. Jitter variance reflects how widely network latency fluctuates; a higher value indicates more severe jitter.

- Maximum Jitter: Take the largest absolute inter-arrival deviation. Maximum jitter reflects the worst-case fluctuation of network latency but is easily skewed by outliers.

- Percentile Jitter: Take a specific percentile (e.g., the 95th) of the absolute inter-arrival deviations. Percentile jitter reflects the fluctuation of network latency while reducing the impact of extreme values.

- End-to-End Delay: Measure the total delay between sender and receiver. This metric combines network latency, processing delay, buffering delay, and other factors, so rather than measuring fluctuation directly, it reflects the overall impact of jitter (and of the buffering used to absorb it) on communication quality.

In practice, choose the measurement method that fits the specific scenario and requirements, and combine it with other network metrics, such as packet loss rate and bandwidth, to evaluate overall network conditions and communication quality.
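The jitter measures described above can be sketched in a few lines of Python. This is an illustrative sketch rather than a standard definition: it defines the inter-arrival deviation as arrival spacing minus send spacing for each consecutive packet pair, uses the absolute deviation for the mean, maximum, and percentile, uses the signed deviation for the variance, and computes the percentile with the nearest-rank method. The function names and sample timestamps are hypothetical.

```python
import statistics


def interarrival_deviations(send_ms, arrival_ms):
    """Arrival spacing minus send spacing for each consecutive packet pair."""
    return [
        (arrival_ms[i] - arrival_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        for i in range(1, len(send_ms))
    ]


def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile (pct between 1 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, -(-pct * len(ordered) // 100))  # integer ceil(pct * n / 100)
    return ordered[rank - 1]


def jitter_metrics(send_ms, arrival_ms, pct=95):
    """Summarize inter-arrival deviations with several common statistics."""
    signed = interarrival_deviations(send_ms, arrival_ms)
    magnitude = [abs(d) for d in signed]
    return {
        "mean": statistics.fmean(magnitude),       # mean jitter
        "variance": statistics.pvariance(signed),  # jitter variance
        "max": max(magnitude),                     # maximum jitter
        f"p{pct}": percentile_nearest_rank(magnitude, pct),  # percentile jitter
    }


# Hypothetical trace: one packet every 20 ms, uneven arrivals (all in ms).
send = [0, 20, 40, 60, 80, 100]
arrival = [50, 72, 88, 135, 141, 160]
print(jitter_metrics(send, arrival))
```

With only a handful of samples the 95th percentile coincides with the maximum; the two separate as more packets are measured. End-to-end delay is left out because it needs synchronized sender and receiver clocks (or a round-trip measurement).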

How to reduce jitter

Because audio and video must be played back in real time and at a steady pace, jitter has a significant impact on their quality. The following techniques and strategies can help reduce it:

- Frame dropping strategy: When network conditions are poor, selectively discarding non-key frames trades some quality for smoothness.

- Frame synchronization algorithm: Predict when data frames will arrive and adjust their playout timing so that frames are played back at stable intervals.

- Adaptive bitrate adjustment: Dynamically adjust the video bitrate based on network conditions to adapt to different bandwidth environments and reduce the likelihood of jitter.

- Jitter buffer: Set up a jitter buffer at the receiving end to store received data packets and restore their order, reducing jitter during playback.

- Optimizing codec performance: Choose higher-performing codecs or optimize the performance of existing codecs to improve processing speed and reduce stuttering or frame skipping.

- Network optimization: Implement measures at the network level, such as selecting better transport protocols and optimizing network routing, to reduce network latency and packet loss, improving data transmission stability.

- Player buffering: Set up a buffer at the player to cache received data. This gives playback some timing tolerance, reducing jitter.

- Optimizing player performance: Optimize the internal processing flow of the player to ensure that it can play data frames at a stable speed, thereby reducing jitter.
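Adaptive bitrate adjustment can take many forms. As one generic illustration (not the algorithm used by WebRTC or Tencent RTC), the sketch below applies an additive-increase, multiplicative-decrease (AIMD) rule driven by the measured packet loss rate. The function name, loss thresholds, step size, back-off factor, and bitrate limits are all arbitrary assumptions chosen for readability.

```python
def next_bitrate_kbps(current_kbps, loss_rate, min_kbps=150, max_kbps=2500):
    """Hypothetical AIMD rule: probe upward additively while the network looks
    clean, back off multiplicatively when loss is heavy."""
    if loss_rate > 0.10:        # heavy loss: cut the bitrate by 15%
        target = current_kbps * 0.85
    elif loss_rate < 0.02:      # little or no loss: probe upward by 50 kbps
        target = current_kbps + 50
    else:                       # moderate loss: hold the current bitrate
        target = current_kbps
    # Keep the result inside the encoder's supported range.
    return max(min_kbps, min(max_kbps, target))


# Feed a short, made-up loss trace through the rule.
bitrate = 1000
for loss in [0.0, 0.0, 0.15, 0.05, 0.0]:
    bitrate = next_bitrate_kbps(bitrate, loss)
    print(f"loss={loss:.0%} -> {bitrate:.0f} kbps")
```

Backing off sharply reacts quickly to congestion, while increasing in small steps probes for spare bandwidth gently; this asymmetry is the usual motivation for AIMD-style rules.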

Jitter Buffer

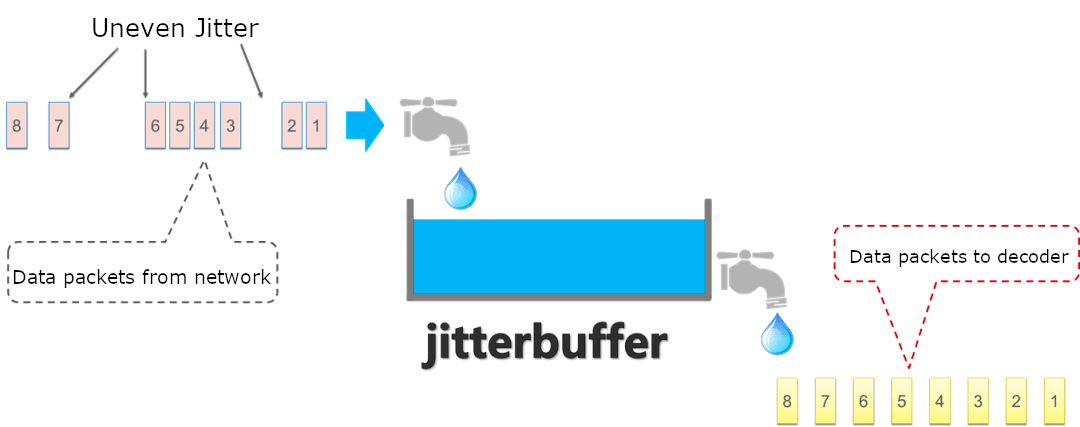

Let's focus on the Jitter Buffer, a technique for reducing jitter in real-time audio and video transmission. A buffer at the receiving end stores received data packets and restores their order, so that playback is smoother.

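In simple terms, the Jitter Buffer acts as a reservoir: it absorbs packets that arrive unevenly from the network and delivers them to the decoder at an even pace. The price is added latency, since every packet waits in the buffer before playback; the deeper the reservoir, the longer the wait.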
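The reservoir idea can be sketched as a small Python class. This is a simplified, hypothetical fixed-size design, not WebRTC's implementation: it waits until `prefill` packets are buffered, then releases exactly one packet per playout tick in sequence-number order, and returns `None` when the expected packet has not arrived so the caller can conceal the gap. For brevity it ignores RTP's 16-bit sequence-number wraparound and assumes every packet has the same duration.

```python
import heapq


class JitterBuffer:
    """Hypothetical fixed-size jitter buffer: reorder, then release at a steady pace."""

    def __init__(self, prefill):
        self.prefill = prefill   # packets to accumulate before playback starts
        self.heap = []           # min-heap of (seq, payload), lowest sequence first
        self.next_seq = None     # sequence number due at the next playout tick
        self.started = False

    def push(self, seq, payload):
        """Store a packet as it arrives from the network, in any order."""
        if self.next_seq is not None and seq < self.next_seq:
            return               # its playout slot has passed: too late, drop it
        heapq.heappush(self.heap, (seq, payload))

    def tick(self):
        """Called by the playout clock at a fixed interval (e.g. every 20 ms).

        Returns the next payload in order, or None while still prefilling or
        when the expected packet is missing (the caller should conceal it).
        """
        if not self.started:
            if len(self.heap) < self.prefill:
                return None
            self.started = True
            self.next_seq = self.heap[0][0]
        # Discard duplicates left over from earlier slots.
        while self.heap and self.heap[0][0] < self.next_seq:
            heapq.heappop(self.heap)
        payload = None
        if self.heap and self.heap[0][0] == self.next_seq:
            payload = heapq.heappop(self.heap)[1]
        self.next_seq += 1
        return payload


jb = JitterBuffer(prefill=3)
for seq in [1, 3, 2]:                 # packet 3 overtakes packet 2 in the network
    jb.push(seq, f"frame{seq}")
print([jb.tick() for _ in range(3)])  # ['frame1', 'frame2', 'frame3']
```

A larger `prefill` tolerates more reordering and delay variation but adds roughly `prefill` × packet duration of latency (for example, 3 × 20 ms = 60 ms), which is the trade-off the algorithms below try to manage.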

Different algorithms can be used to control how the Jitter Buffer behaves. Here are some common Jitter Buffer algorithms:

- Fixed-size Jitter Buffer: This is the simplest algorithm where a fixed size is set for the Jitter Buffer (i.e., the number of data packets it can store). This algorithm is easy to implement but may not adapt well to changing network conditions.

- Adaptive Jitter Buffer: This algorithm dynamically adjusts the size of the Jitter Buffer based on network conditions. For example, when significant fluctuations in network latency are detected, the buffer size can be increased so that fewer late packets miss their playout time and get discarded. Conversely, when network conditions are good, the buffer size can be reduced to minimize playback delay.

- Delay prediction-based Jitter Buffer: This algorithm determines when to retrieve packets from the Jitter Buffer for playback by predicting the delay of received packets.

- Packet loss rate-based Jitter Buffer: This algorithm adjusts the size of the Jitter Buffer based on the packet loss rate at the receiving end. When the packet loss rate is high, the buffer size can be increased, giving retransmitted packets more time to arrive before they are due for playback. Conversely, when the packet loss rate is low, the buffer size can be reduced to lower playback delay.
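As a concrete, hypothetical instance of the adaptive approach, the sketch below keeps a sliding window of recent transit times and sets the target buffer delay to a high percentile of their spread above the window minimum, clamped to a fixed range. The class name, window length, percentile, and limits are illustrative assumptions; real jitter buffers typically use more elaborate estimators.

```python
from collections import deque


class AdaptiveTargetDelay:
    """Hypothetical adaptive sizing rule for a jitter buffer."""

    def __init__(self, window=100, pct=95, min_ms=20, max_ms=500):
        self.transit = deque(maxlen=window)  # most recent transit times (ms)
        self.pct = pct
        self.min_ms = min_ms
        self.max_ms = max_ms

    def on_packet(self, send_ms, arrival_ms):
        # Raw transit time; any constant clock offset is removed in target_ms().
        self.transit.append(arrival_ms - send_ms)

    def target_ms(self):
        """Buffer delay needed to absorb `pct` percent of recent delay variation."""
        if not self.transit:
            return self.min_ms
        floor = min(self.transit)
        spread = sorted(t - floor for t in self.transit)
        rank = max(1, -(-self.pct * len(spread) // 100))  # nearest-rank percentile
        return max(self.min_ms, min(self.max_ms, spread[rank - 1]))


# A single 40 ms delay spike raises the target, which falls again once the
# spike leaves the (deliberately tiny) window.
est = AdaptiveTargetDelay(window=3)
for i, transit in enumerate([50, 90, 50, 50, 50]):
    est.on_packet(i * 20, i * 20 + transit)
    print(est.target_ms())  # prints 20, 40, 40, 40, 20 (one per line)
```

Subtracting the window minimum removes any constant clock offset between sender and receiver, and because old samples fall out of the window, the target shrinks again after a burst of delay has passed.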

Jitter Buffer in WebRTC

WebRTC (Web Real-Time Communication) is an open-source project aimed at providing simple and efficient real-time audio and video communication capabilities for web browsers and mobile applications. Through JavaScript APIs and related network protocols, WebRTC enables developers to implement real-time audio and video communication directly in web pages without the need for any plugins or third-party software.

In WebRTC, the implementation of the Jitter Buffer relies on several key components:

- RTP (Real-Time Transport Protocol): WebRTC uses RTP for the transmission of audio and video data. RTP packets contain important information such as sequence numbers and timestamps, which are crucial for the implementation of the Jitter Buffer as they help determine the packet order and playback timing.

- RTCP (RTP Control Protocol): WebRTC uses RTCP to collect and exchange statistics about network conditions, such as packet loss, interarrival jitter, and round-trip time. This information can guide the behavior of the Jitter Buffer, for example when dynamically adjusting the buffer size.

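- NACK (Negative Acknowledgement): NACK is a mechanism for requesting retransmission of lost packets. In WebRTC, when the receiver detects a missing packet (a gap in the RTP sequence numbers), it can send a NACK message asking the sender to retransmit that packet. This lets lost packets be recovered before their playout time, at the cost of some added delay, which reduces stuttering caused by loss.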

- Media Stream Processing: The media stream processing module in WebRTC is responsible for depacketizing, decoding, and playing received RTP packets. During this process, the Jitter Buffer orders the packets by sequence number and schedules them by timestamp to ensure the correct playback order and timing.
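RTCP receiver reports carry an interarrival jitter value, and RFC 3550 (section 6.4.1) defines how it is computed: for each packet, D is the change in transit time relative to the previously received packet, and the running estimate J moves 1/16 of the way toward |D|, that is, J = J + (|D| - J) / 16. A minimal Python sketch of that estimator follows; the class name is our own, and all times must be expressed in RTP timestamp units (for example, 8000 units per second for 8 kHz audio).

```python
class InterarrivalJitter:
    """Running interarrival jitter estimate following the RFC 3550 formula.

    All values are in RTP timestamp units; the receiver must convert its
    local arrival clock into the same units.
    """

    def __init__(self):
        self.jitter = 0.0
        self.prev = None  # (arrival, rtp_timestamp) of the previously received packet

    def update(self, arrival, rtp_timestamp):
        # Packets are processed in arrival order, not sequence order.
        if self.prev is not None:
            prev_arrival, prev_ts = self.prev
            # D: difference in relative transit time between this packet and the last.
            d = (arrival - prev_arrival) - (rtp_timestamp - prev_ts)
            # Exponential smoothing with gain 1/16, as in RFC 3550.
            self.jitter += (abs(d) - self.jitter) / 16
        self.prev = (arrival, rtp_timestamp)
        return self.jitter


# 8 kHz audio, one packet every 20 ms (160 units); the third packet is 10 ms late.
est = InterarrivalJitter()
for arrival, ts in [(0, 0), (160, 160), (400, 320), (480, 480)]:
    print(est.update(arrival, ts))  # prints 0.0, 0.0, 5.0, 9.6875 (one per line)
```

One late packet affects two consecutive D values (it arrives late relative to its predecessor and early relative to its successor), which is why the estimate rises twice in this example.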

The implementation of the Jitter Buffer in WebRTC can be broadly divided into the following steps:

- Receive RTP packets: Upon receiving RTP packets, WebRTC stores them in the Jitter Buffer.

- Packet sorting: Sort the packets based on their sequence numbers and timestamps to ensure the correct playback order.

- Buffer management: Dynamically adjust the size of the Jitter Buffer based on network conditions such as latency and packet loss rate. This can be achieved using statistical information collected through RTCP.

- Packet loss handling: If a missing packet is detected, a NACK message can be sent to request the sender to retransmit that packet.

- Packet retrieval: Retrieve packets from the Jitter Buffer for decoding and playback based on their timestamps and the current playback time.

- Synchronization handling: For audio-video synchronization, the behavior of the Jitter Buffer may need to be adjusted based on the latency difference between the audio and video streams, for example by delaying one stream so that it lines up with the other.
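The packet loss handling step relies on noticing gaps in RTP sequence numbers, which are 16-bit values that wrap from 65535 back to 0. The Python sketch below is a hypothetical helper (not a WebRTC API): it tracks the newest sequence number seen, reports newly missing numbers as candidates for a NACK request, and clears an entry when a late or retransmitted packet fills the hole.

```python
def seq_newer(a, b):
    """True if 16-bit RTP sequence number a is newer than b (handles wraparound)."""
    return a != b and ((a - b) & 0xFFFF) < 0x8000


class NackTracker:
    """Hypothetical gap detector that feeds retransmission (NACK) requests."""

    def __init__(self):
        self.highest = None   # newest sequence number received so far
        self.missing = set()  # sequence numbers still awaiting (re)transmission

    def on_packet(self, seq):
        """Record an arriving packet; return newly detected missing numbers."""
        if self.highest is None:
            self.highest = seq
            return []
        if seq_newer(seq, self.highest):
            gap = []
            s = (self.highest + 1) & 0xFFFF
            while s != seq:           # everything skipped over is presumed lost
                gap.append(s)
                s = (s + 1) & 0xFFFF
            self.missing.update(gap)
            self.highest = seq
            return gap                # candidates for a NACK message
        self.missing.discard(seq)     # late or retransmitted packet filled a hole
        return []


tracker = NackTracker()
for seq in [65533, 65535, 2, 0]:
    print(seq, tracker.on_packet(seq), sorted(tracker.missing))
```

A production version would likely also cap the size of the missing set, stop requesting packets whose playout deadline has already passed, and limit how often each packet is re-requested.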

Through the above steps, WebRTC implements a robust Jitter Buffer that effectively reduces jitter phenomena and improves the quality of real-time audio and video communication.

Finally, a brief word on NetEQ (Network Equalizer), one of the core technologies in WebRTC. NetEQ is essentially WebRTC's audio Jitter Buffer. It uses an adaptive jitter buffering algorithm that continuously tunes the buffering delay to current network conditions, with the primary goal of playing incoming audio packets smoothly at the lowest possible latency. NetEQ also includes packet loss concealment and works closely with the audio decoders, which helps maintain good voice quality even under high packet loss.
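NetEQ's internals are considerably more elaborate than anything shown here, but the core idea of an adaptive audio jitter buffer can be illustrated with a hypothetical decision rule: compare the amount of buffered audio with a target delay, slightly speed up or slow down playout (time compression or stretching) to move toward the target, and fall back to loss concealment when the next packet is missing. The function name, action labels, and the 20 ms margin are assumptions for illustration, not NetEQ's actual logic.

```python
def playout_action(buffered_ms, target_ms, next_packet_available, margin_ms=20):
    """Hypothetical per-frame decision for an adaptive audio jitter buffer."""
    if not next_packet_available:
        return "conceal"    # synthesize audio to hide the missing packet
    if buffered_ms > target_ms + margin_ms:
        return "speed_up"   # time-compress playout to shed excess delay
    if buffered_ms < target_ms - margin_ms:
        return "slow_down"  # time-stretch playout to rebuild the buffer
    return "normal"         # decode and play at normal speed


for level in [20, 60, 120]:
    print(level, playout_action(level, target_ms=60, next_packet_available=True))
```

Adjusting playout speed gradually, rather than dropping or inserting whole packets, keeps latency near the target without audible gaps, which is the behavior the paragraph above attributes to NetEQ's adaptive buffering.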

Using Tencent RTC

Tencent Real-Time Communication (TRTC) is a cloud computing service provided by Tencent Cloud for real-time audio and video communication and interactive live streaming. TRTC offers a powerful set of APIs and SDKs that can be used to build various real-time audio and video applications, such as video conferencing, online education, interactive live streaming, and more.

Regarding the jitter issue in the real-time audio and video domain, Tencent RTC offers the following advantages:

- Dynamic bitrate adjustment: Tencent RTC can dynamically adjust the bitrate of audio and video based on network conditions and device performance, adapting to different network environments and reducing the likelihood of jitter.

- Efficient Jitter Buffer: Tencent RTC implements an efficient audio and video Jitter Buffer, which dynamically adjusts the buffer size and packet retrieval strategy based on network conditions, reducing jitter phenomena.

- Optimized audio and video codecs: Tencent RTC supports multiple audio and video codecs that have been optimized to enhance encoding and decoding performance, reducing issues like freezing or frame skipping.

- Network optimization: Leveraging Tencent Cloud's extensive network resources and optimization technologies such as intelligent route selection and Quality of Service (QoS), Tencent RTC reduces network latency and packet loss rate, thereby improving the stability of audio and video transmission.

Conclusion

With Tencent RTC, developers can minimize jitter and deliver outstanding audio-video experiences, even in challenging network environments. Sign up for free and quickly build your own audio-video application.

If you have any questions or need assistance, our support team is always ready to help. Feel free to contact us or join us on Discord.